Decoding Disinformation: Automating the Detection of Coordinated Inauthentic Behavior

Disinformation campaigns are evolving, becoming more sophisticated and harder to detect. A recent report "Visual assessment of Coordinated Inauthentic Behaviour in disinformation campaigns" by EU DisinfoLab under the vera.ai project sheds light on this issue, analyzing three major cases:

Operation Overload, a pro-Russian campaign targeting European media

A massive TikTok influence campaign against a former Ukrainian defense minister

QAnon's "Save the Children" movement, which repurposed a legitimate cause to spread conspiracy theories

Using a visual assessment methodology with 50 CIB indicators, the report evaluates these campaigns, assigning probability scores to determine the likelihood of coordinated manipulation. This structured approach provides a clearer picture of how disinformation spreads.

Breaking Down CIB: How It Works and How It's Assessed

CIB involves groups of inauthentic accounts working together to manipulate public opinion. Analysts assess CIB using five key indicators: coordination, authenticity, source, distribution, and impact. The likelihood of CIB is visualized using a color-coded gauge:

🟢 Green: High likelihood of CIB

🟡 Yellow: Medium likelihood

🔴 Red: Low likelihood

Understanding these behaviors helps analysts identify and mitigate disinformation efforts.

Computational Tactics Used in Coordinated Disinformation Campaigns

Disinformation and CIB detection methods generally fall into two categories, interaction-based and similarity-based approaches, as briefly introduced in my previous post “Bridging the Gap: Rethinking OSINT Workflows to Counter Disinformation”.

Interaction-Based Detection

These methods analyze group-like online activities by examining:

How users repost, share hashtags, images, or mentions in a coordinated manner

Content propagation patterns and user engagement trends

Relationships between posts and users, identifying unusual interaction behaviors in social contexts

Similarity-Based Detection

Detects patterns across accounts by analyzing:

Profile similarities, such as handles, descriptions, or images

Synchronized posting behavior

Content alignment, where multiple accounts share narratives from the same source

Linguistic patterns, analyzing writing styles to detect coordinated messaging

Cross-platform inconsistencies, flagging discrepancies in shared data

Both approaches leverage network analysis and machine learning, including social network analysis and transformer-based deep learning models such as BERT, to improve detection accuracy. Together, these techniques help expose coordinated disinformation efforts.

Up next, we’ll explore a few key tactics that automate expert analysis, mapping them to industry-standard frameworks like DISARM and the CIB Detection Tree, giving you deeper insights and practical tools for detection.

1. Repost Networks: The Simplest Form of Amplification

A repost network consists of accounts boosting a message by resharing it. This tactic expands reach across different follower groups. However, a campaign’s success might lead to organic users resharing the content, making it harder to differentiate between genuine engagement and inauthentic amplification.
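To make the structure concrete, a repost network can be modelled as a weighted directed graph from each resharing account to the original author. The sketch below is a minimal illustration in plain Python, assuming repost records are already available as `(reposter, original_author)` pairs; the function names and the toy data are hypothetical.

```python
from collections import Counter, defaultdict

def build_repost_network(reposts):
    """Build a weighted directed repost graph.

    reposts: iterable of (reposter, original_author) pairs.
    Returns {reposter: Counter({original_author: repost_count})}.
    """
    graph = defaultdict(Counter)
    for reposter, author in reposts:
        if reposter != author:  # ignore self-reposts
            graph[reposter][author] += 1
    return graph

def amplifiers_of(graph, author):
    """Return accounts that reposted `author`, most active first."""
    counts = Counter({r: targets[author] for r, targets in graph.items() if author in targets})
    return counts.most_common()

# Toy data: three accounts reshare "seed"; one also reshares another author.
reposts = [("bot1", "seed"), ("bot2", "seed"), ("bot1", "seed"),
           ("user9", "seed"), ("bot2", "other")]
graph = build_repost_network(reposts)
print(amplifiers_of(graph, "seed"))  # [('bot1', 2), ('bot2', 1), ('user9', 1)]
```

Note that raw counts alone cannot tell `user9` (possibly organic) apart from the bots, which is exactly the overestimation problem listed below; the timing-based tactics that follow address it.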

Challenges:

Overestimation of campaign size due to organic reposts

Differentiating human activity from bot-driven engagement

Relevant DISARM Framework Tactics:

2. Coordinated Repost Networks: Synchronized Amplification

This tactic involves multiple accounts amplifying the same third-party message within a short time window. Co-retweeting within seconds is a strong signal of coordinated behavior. Research (Keller et al., 2020; Graham et al., 2020) suggests that a one-minute threshold is optimal for identifying coordinated reposting patterns.
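The one-minute threshold translates directly into a pairwise rule: two accounts are linked whenever they repost the same message within 60 seconds of each other, and the link gets stronger each time this happens again. A minimal sketch, assuming repost events arrive as `(account, message_id, unix_timestamp)` tuples; the function name and data are hypothetical, and the all-pairs loop is meant for small samples rather than platform-scale data.

```python
from collections import Counter, defaultdict
from itertools import combinations

def co_repost_pairs(reposts, window_seconds=60):
    """Count account pairs that reposted the same message within `window_seconds`.

    reposts: iterable of (account, message_id, unix_timestamp) tuples.
    Returns Counter({(account_a, account_b): number_of_fast_co_reposts}).
    """
    by_message = defaultdict(list)
    for account, message_id, ts in reposts:
        by_message[message_id].append((ts, account))

    pairs = Counter()
    for events in by_message.values():
        events.sort()  # chronological order, so t2 >= t1 below
        for (t1, a1), (t2, a2) in combinations(events, 2):
            if a1 != a2 and t2 - t1 <= window_seconds:
                pairs[tuple(sorted((a1, a2)))] += 1
    return pairs

reposts = [
    ("a", "m1", 0), ("b", "m1", 30), ("c", "m1", 500),
    ("a", "m2", 1000), ("b", "m2", 1010),
]
print(dict(co_repost_pairs(reposts)))  # {('a', 'b'): 2}
```

Account `c` reposted `m1` too, but eight minutes later, so it is not linked. Pairs that co-repost repeatedly across many messages are stronger candidates than a single coincidence.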

Challenges:

Identifying bot-like accounts that may not be central to the campaign

Separating real grassroots campaigns from inauthentic coordination

Relevant DISARM Tactics:

3. Coordinated Posting Networks: "Copypasta" in Action

This tactic involves multiple accounts posting identical messages to create a false sense of consensus. Disinformation agents often use tools like X Pro (formerly TweetDeck) to manage multiple accounts and post at carefully spaced intervals. Platforms like Twitter/X now restrict this tactic as spam, but it persists elsewhere.

The downside is that linked accounts posting the same message can create noise. For example, real users often share the same message on their own accounts, and bots might post unrelated or generic content.

Plus, identical messages generated by social media share buttons on news sites can lead to false positives. Just because posts look similar doesn’t mean they’re coordinated.

That’s why timing matters. Applying temporal constraints helps filter out misleading links, which makes timing a key trait in detecting CIB, as shown in the first branch of the CIB Detection Tree.
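Combining text matching with a time constraint can be sketched as follows: normalize each post, group identical texts, and flag a text only if enough distinct accounts posted it inside one sliding time window. This is a minimal sketch under stated assumptions: posts arrive as `(account, text, unix_timestamp)` tuples, and the 5-minute window, the 3-account minimum and the normalization rules are illustrative choices, not values from the CIB Detection Tree.

```python
import re
from collections import defaultdict

URL_RE = re.compile(r"https?://\S+")

def normalize(text):
    """Lowercase, strip URLs and collapse whitespace so trivial variations still match."""
    return " ".join(URL_RE.sub("", text.lower()).split())

def copypasta_clusters(posts, window_seconds=300, min_accounts=3):
    """Find texts posted by >= min_accounts distinct accounts inside one time window.

    posts: iterable of (account, text, unix_timestamp).
    Returns (normalized_text, sorted_accounts) for the window with the most accounts.
    """
    by_text = defaultdict(list)
    for account, text, ts in posts:
        by_text[normalize(text)].append((ts, account))

    clusters = []
    for text, events in by_text.items():
        events.sort()
        best, start = set(), 0
        for end in range(len(events)):
            # Slide the left edge until the window spans <= window_seconds.
            while events[end][0] - events[start][0] > window_seconds:
                start += 1
            accounts = {acc for _, acc in events[start:end + 1]}
            if len(accounts) > len(best):
                best = accounts
        if len(best) >= min_accounts:
            clusters.append((text, sorted(best)))
    return clusters

posts = [
    ("a", "Vote NO now! https://x.co/1", 0),
    ("b", "vote no   now!", 60),
    ("c", "Vote NO now! https://x.co/2", 120),
    ("d", "Vote NO now!", 10000),  # same text, hours later
    ("e", "Something else", 50),
]
print(copypasta_clusters(posts))  # [('vote no now!', ['a', 'b', 'c'])]
```

Account `d` posted the same text but far outside the window, so it is left out. Stripping URLs makes share-button posts look more alike, so the time window is what separates bursts from organic sharing spread over days.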

Challenges:

Avoiding false positives from legitimate social media share buttons

Differentiating real grassroots movements from artificial amplification

Example: A BBC report “Kate rumours linked to Russian disinformation” exposed a Russian disinformation campaign where identical phrases were posted by hundreds of accounts, suggesting an orchestrated effort.

Relevant DISARM Tactics:

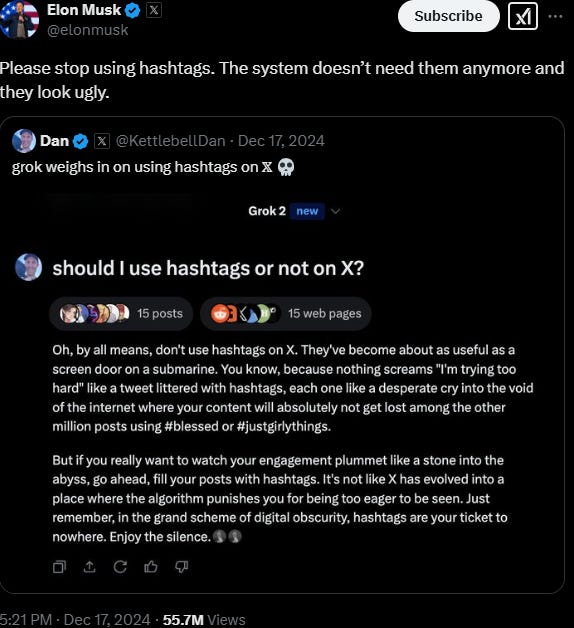

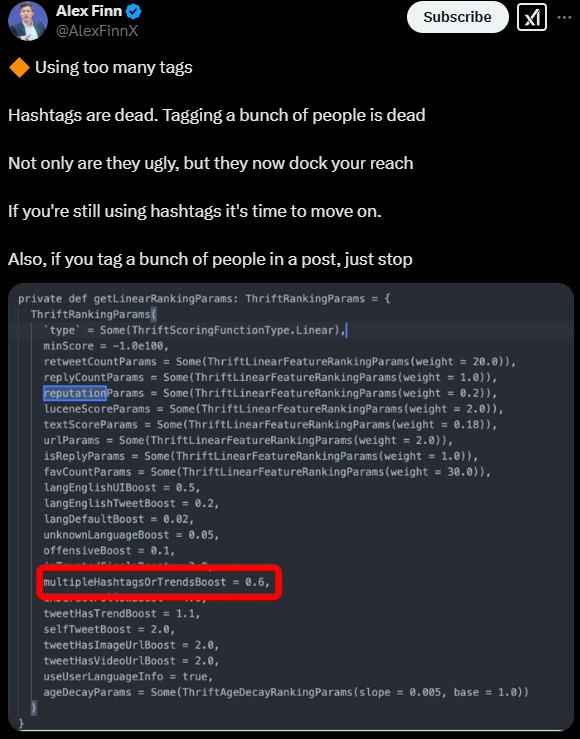

4. Coordinated Hashtag Networks: Hashtag Hijacking and Manipulating Trends

This involves multiple accounts posting the same hashtag within a short time window to dominate online discourse. Disinformation actors hijack trending hashtags to insert their narratives into mainstream discussions (see examples in Facebook’s July 2021 report).

Broad hashtags like #COVID19 or #Election2024 are prime targets. A classic example is how #election2016 was hijacked to target a candidate during the 2016 U.S. presidential election.

Relying solely on hashtag activity to track coordination can be messy. To cut through this noise, various techniques can help refine co-hashtag networks. One effective method is the TF-IDF weighting scheme, which reduces the impact of viral hashtags, minimizes hijacking issues, and highlights less popular but potentially meaningful ones. Simply put, if multiple accounts repeatedly use the same hashtag in a short period, it’s a stronger signal of coordination than just a one-time mention. In a configurable system, users can fine-tune these connections by setting a TF-IDF threshold, helping to filter out irrelevant links and create a clearer picture of coordinated activity.
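One possible reading of the TF-IDF idea treats each account as a "document" made of the hashtags it used. Hashtags used by nearly every account (the viral ones) get an IDF close to zero, and accounts are linked only when the cosine similarity of their TF-IDF vectors passes a configurable threshold. The sketch below makes those assumptions explicit; the function names, the 0.5 threshold and the toy data are hypothetical.

```python
import math
from collections import Counter
from itertools import combinations

def tfidf_vectors(account_hashtags):
    """account_hashtags: {account: [hashtag, ...]} (repeats allowed).
    Returns {account: {hashtag: tf-idf weight}}."""
    n_accounts = len(account_hashtags)
    doc_freq = Counter()
    for tags in account_hashtags.values():
        doc_freq.update({t.lower() for t in tags})
    vectors = {}
    for account, tags in account_hashtags.items():
        tf = Counter(t.lower() for t in tags)
        total = sum(tf.values())
        # A hashtag used by every account gets idf = log(1) = 0, damping viral tags.
        vectors[account] = {
            tag: (count / total) * math.log(n_accounts / doc_freq[tag])
            for tag, count in tf.items()
        }
    return vectors

def cosine(u, v):
    dot = sum(w * v.get(tag, 0.0) for tag, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

def co_hashtag_edges(account_hashtags, threshold=0.5):
    """Link account pairs whose TF-IDF hashtag profiles are at least `threshold` similar."""
    vectors = tfidf_vectors(account_hashtags)
    edges = {}
    for a, b in combinations(sorted(vectors), 2):
        sim = cosine(vectors[a], vectors[b])
        if sim >= threshold:
            edges[(a, b)] = round(sim, 3)
    return edges

data = {
    "a": ["#COVID19", "#FakeCure", "#FakeCure"],
    "b": ["#COVID19", "#FakeCure"],
    "c": ["#COVID19", "#Weather"],
    "d": ["#COVID19", "#Sports"],
}
print(co_hashtag_edges(data))  # {('a', 'b'): 1.0}
```

Everyone used `#COVID19`, so it contributes nothing; only the shared, less common `#FakeCure` links `a` and `b`. Raising or lowering `threshold` is the knob that trades recall for a cleaner network.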

Example: A recent DFRLab report presents a network map of tweets containing #istandwithputin or istandwithputin between February 23, 2022 and March 4, 2022.

In another example, a Clemson University study found that 11% of accounts controlled 44% of posts under a specific pro-Rwanda propaganda hashtag.

Challenges:

Hashtag hijacking can create false signals

Platforms like X (formerly Twitter) have reduced hashtag reach, limiting the tactic's effectiveness

Relevant DISARM Tactics:

Execute Tactic: T0049.002 “Hijack existing hashtag”

Prepare tactic: T0019.002 “Hijack Hashtags”

Prepare tactic: T0080.003 “Identify Trending Topics/Hashtags”

Prepare tactic: T0104.006 “Create dedicated hashtag”

5. Coordinated Hashtag Sequence Networks: Obfuscating Coordination

Instead of using a single hashtag, bad actors post a sequence of hashtags across multiple posts. This tactic strengthens the case for CIB when used repeatedly by multiple accounts.

🔍 Detection Tip: Filtering out low-frequency hashtags and using TF-IDF weighting helps analysts identify meaningful patterns while reducing noise.

Example: Research by Pacheco et al. (2021) explored coordinated account networks by analyzing matched hashtag sequences. They flagged suspicious groups by removing isolated accounts and identifying connected clusters. The larger the cluster, the more likely it indicates coordination—since it’s rare for many accounts to post identical hashtag sequences by coincidence.
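The cluster logic described above can be sketched with a union-find structure: accounts that posted an identical ordered hashtag sequence are merged, isolated accounts are dropped, and clusters are ranked by size. For simplicity this sketch treats a "sequence" as the ordered hashtags within a single post, which is an assumption rather than a reproduction of Pacheco et al.'s exact pipeline; the minimum length of 3 and all names are hypothetical.

```python
import re
from collections import defaultdict

HASHTAG_RE = re.compile(r"#\w+")

def hashtag_sequence(text):
    """Ordered, lowercased hashtags in a post, e.g. ('#a', '#b', '#c')."""
    return tuple(tag.lower() for tag in HASHTAG_RE.findall(text))

def sequence_clusters(posts, min_len=3):
    """Group accounts that posted an identical hashtag sequence of length >= min_len.

    posts: iterable of (account, text).
    Returns clusters (sets of accounts), largest first; isolated accounts are dropped.
    """
    parent = {}

    def find(x):
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    def union(a, b):
        parent[find(a)] = find(b)

    by_sequence = defaultdict(set)
    for account, text in posts:
        seq = hashtag_sequence(text)
        if len(seq) >= min_len:  # short sequences collide by chance too often
            by_sequence[seq].add(account)

    for accounts in by_sequence.values():
        accounts = sorted(accounts)
        for other in accounts[1:]:
            union(accounts[0], other)

    clusters = defaultdict(set)
    for account in parent:
        clusters[find(account)].add(account)
    return sorted((c for c in clusters.values() if len(c) > 1), key=len, reverse=True)

posts = [
    ("a", "Look #A #B #C"), ("b", "#a #b #c wow"), ("c", "#A #B #C"),
    ("d", "#C #B #A"), ("e", "#C #B #A"),
    ("f", "#X #Y #Z"),  # unique sequence: isolated, dropped
    ("g", "#A #B"),     # too short to count
]
print(sequence_clusters(posts))  # largest first: {'a','b','c'}, then {'d','e'}
```

Order matters here: `#C #B #A` forms its own cluster, separate from `#A #B #C`.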

While hashtags remain valuable on some platforms, engagement with multi-hashtag posts tends to be suppressed on X (formerly Twitter), making their impact vary depending on where they're used.

The related DISARM tactics for co-hashtag sequence networks are similar to those for co-hashtag networks:

Prepare tactic: T0015 “Create hashtags and search artifacts”

Execute Tactic: T0049.002 “Hijack existing hashtag”

6. Coordinated Mention Network: Amplifying Narratives and Targeting through Mentions

A coordinated mention network occurs when multiple accounts repeatedly tag the same user or entity, often to amplify disinformation or manipulate narratives. This tactic is commonly used in political disinformation campaigns—either to create the illusion of widespread support or to harass and intimidate public figures, celebrities, or political opponents.

Research at Indiana University (Ratkiewicz et al., 2011) found that astroturfing campaigns frequently use coordinated mentions to simulate organic engagement. Their system flagged accounts like @PeaceKaren and @HopeMarie, which engaged in reciprocal mentions to boost a political message. Mentions also help track meme dissemination: legitimate trends tend to have diverse “injection points,” while coordinated mentions often reveal clique-like behavior, where users engage only with others pushing the same content.
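Reciprocal mentioning of the kind described above is easy to surface from raw post text. The sketch below extracts `@handles`, counts directed mentions, and keeps pairs that mention each other at least a minimum number of times in both directions. It is an illustration under assumptions, not the Indiana University system: posts are `(author, text)` tuples, the threshold of 2 is arbitrary, and the posts are toy data that only borrow the handle names from the study.

```python
import re
from collections import Counter

MENTION_RE = re.compile(r"@(\w+)")

def mention_counts(posts):
    """posts: iterable of (author, text). Returns Counter({(author, mentioned): n})."""
    counts = Counter()
    for author, text in posts:
        for handle in MENTION_RE.findall(text):
            if handle.lower() != author.lower():  # skip self-mentions
                counts[(author.lower(), handle.lower())] += 1
    return counts

def reciprocal_pairs(posts, min_each_way=2):
    """Pairs that mention each other at least `min_each_way` times in both directions."""
    counts = mention_counts(posts)
    pairs = set()
    for (a, b), n in counts.items():
        # a < b visits each unordered pair once
        if a < b and n >= min_each_way and counts.get((b, a), 0) >= min_each_way:
            pairs.add((a, b))
    return pairs

posts = [
    ("PeaceKaren", "Great point @HopeMarie"),
    ("PeaceKaren", "@HopeMarie agreed!"),
    ("HopeMarie", "Thanks @PeaceKaren"),
    ("HopeMarie", "@PeaceKaren exactly"),
    ("casual_user", "hi @HopeMarie"),  # one-way, single mention
]
print(reciprocal_pairs(posts))  # {('hopemarie', 'peacekaren')}
```

Larger cliques can be found by checking whether flagged pairs close into triangles, but as noted below, a mention does not prove the target ever saw the post.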

However, mentions are less reliable indicators of information flow compared to reposts. A repost (retweet) confirms exposure—user B sees and shares user A’s message—whereas a mention doesn’t guarantee visibility or engagement.

Due to their potential for spam, harassment, or manipulation, excessive mentions are now penalized on X (formerly Twitter). Users can also restrict who can tag them in the platform’s settings under “Audience, Media, and Tagging.”

Relevant DISARM Tactics:

Execute Tactic: T0039 - “Bait legitimate influencers”

7. Coordinated Link Sharing Network: Amplifying Disinformation through Synchronized URL Sharing

A network of accounts, pages, or groups sharing the same links within a short time frame can indicate a coordinated effort to spread misinformation.
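A practical detail in link-sharing analysis is that the "same link" often arrives in slightly different forms (tracking parameters, `www.`, trailing slashes, fragments). The sketch below normalizes URLs with the standard library and then links accounts that shared the same normalized URL within a short window, keeping only pairs that did so for several distinct URLs. This is a simplified illustration, not CooRnet's algorithm; the 60-second window, the two-URL minimum, the tracking-parameter list and all names are assumptions.

```python
from collections import defaultdict
from itertools import combinations
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

TRACKING_PREFIXES = ("utm_", "fbclid", "gclid")  # illustrative, not exhaustive

def normalize_url(url):
    """Lowercase scheme/host, drop 'www.', fragments, trailing '/' and tracking parameters."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if host.startswith("www."):
        host = host[4:]
    query = [(k, v) for k, v in parse_qsl(parts.query)
             if not k.lower().startswith(TRACKING_PREFIXES)]
    return urlunsplit((parts.scheme.lower(), host, parts.path.rstrip("/"), urlencode(query), ""))

def coordinated_link_pairs(shares, window_seconds=60, min_repeats=2):
    """shares: iterable of (account, url, unix_timestamp).
    Returns {(a, b): set_of_urls} for pairs that co-shared >= min_repeats URLs within the window."""
    by_url = defaultdict(list)
    for account, url, ts in shares:
        by_url[normalize_url(url)].append((ts, account))
    pair_urls = defaultdict(set)
    for url, events in by_url.items():
        events.sort()
        for (t1, a1), (t2, a2) in combinations(events, 2):
            if a1 != a2 and t2 - t1 <= window_seconds:
                pair_urls[tuple(sorted((a1, a2)))].add(url)
    return {pair: urls for pair, urls in pair_urls.items() if len(urls) >= min_repeats}

shares = [
    ("p1", "https://www.example.com/story?utm_source=fb", 0),
    ("p2", "https://example.com/story/", 20),
    ("p1", "https://example.com/other#top", 100),
    ("p2", "https://EXAMPLE.com/other", 130),
    ("p3", "https://example.com/story", 5000),  # same story, much later
]
print(coordinated_link_pairs(shares))
# {('p1', 'p2'): {'https://example.com/story', 'https://example.com/other'}} (set order may vary)
```

Requiring repeated co-sharing across several URLs, rather than a single coincidence, is what keeps popular news links from linking every reader to every other reader.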

In my blog posts "Coordinated Sharing Behavior Detection Conference at Sheffield" and "Tools and Techniques for Tackling Disinformation: A Closer Look", I discussed an academic toolset called CooRnet. It is designed specifically to detect coordinated link sharing, which makes these types of disinformation efforts easier to spot.

Keep in mind that, following changes to X's engagement algorithm in 2025, including links in posts can actually reduce engagement and reach. If you need to share a link, the recommended approach is to reply to your original post with the link rather than include it directly.

Update: On 25/04/2025, Elon Musk clarified that “there is no explicit rule limiting the reach of links in posts.”

Coordinated link sharing is tied to several DISARM tactics, such as:

Prepare Tactic: T0098.002 “Leverage Existing Inauthentic News Sites”

Prepare Tactic: T0013 “Create inauthentic websites”

Prepare Tactic: T0098 “Establish Inauthentic News Sites”

Prepare Tactic: T0099 “Prepare Assets Impersonating Legitimate Entities”

8. Coordinated Image Sharing: Distributing Visual Propaganda

Spamouflage techniques use AI-generated images, memes, and manipulated visuals to push narratives. It’s particularly common in "mix-media" or "cross-media" information campaigns, where the same text, images, audio, or video content is distributed across multiple platforms to push a consistent narrative.

Example: During the 2019 Hong Kong protests, as studied in the European Commission report “It’s not funny anymore. Far-right extremists’ use of humour”, this tactic was used to coordinate messages and visuals across social media. The “mix-media” strategy involves creating tailored content for each platform, while the “cross-media” strategy distributes content from a single source through strategic link placement. Both strategies use images and videos to frame and support narratives, and the same image can be reused to lend credibility to different messages. For more detail, see the 2018 report “Examining Strategic Integration of Social Media Platforms in Disinformation Campaign Coordination” by the NATO Strategic Communications Center of Excellence (StratCom).
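Because the same image is reused across posts and platforms, often recompressed or slightly edited, exact file hashes miss most duplicates. A common alternative, swapped in here as an illustration and not named in the sources above, is perceptual hashing. The sketch implements the simplest variant, average hashing, and assumes the image has already been converted to a small grayscale grid (e.g. 8x8) by an imaging library; the 2x2 toy grids, the distance threshold and the function names are hypothetical.

```python
def average_hash(pixels):
    """Average hash of a small grayscale image given as a 2D list of 0-255 ints.
    Assumes resizing/grayscale conversion was done beforehand by an imaging library."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)  # 1 where brighter than average
    return bits

def hamming(h1, h2):
    """Number of differing bits between two hashes."""
    return bin(h1 ^ h2).count("1")

def near_duplicate_pairs(image_hashes, max_distance=5):
    """image_hashes: {(account, post_id): hash}. Returns pairs of posts from
    different accounts whose hashes differ by <= max_distance bits."""
    items = sorted(image_hashes.items())
    pairs = []
    for i, ((acc1, post1), h1) in enumerate(items):
        for (acc2, post2), h2 in items[i + 1:]:
            if acc1 != acc2 and hamming(h1, h2) <= max_distance:
                pairs.append(((acc1, post1), (acc2, post2)))
    return pairs

# Toy 2x2 "images": a re-compressed copy keeps the same light/dark pattern.
hashes = {
    ("acct1", "p1"): average_hash([[10, 200], [200, 10]]),
    ("acct2", "p7"): average_hash([[12, 198], [205, 9]]),
    ("acct3", "p9"): average_hash([[200, 10], [10, 200]]),
}
print(near_duplicate_pairs(hashes, max_distance=1))  # [(('acct1', 'p1'), ('acct2', 'p7'))]
```

With real 8x8 grids (64-bit hashes), a few bits of tolerance absorbs recompression and resizing, while heavily edited or AI-regenerated variants may still slip through.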

Related DISARM Tactics:

Prepare tactic: T0086 “Develop Image-based Content”

Prepare tactic: T0105.001 “Photo Sharing”

Execute tactic: T0049.004 “Utilize Spamouflage” (casting messages as images to evade platform spam policies)

9. Coordinated Account Handle Creation: Identifying Malicious Networks through Account Patterns

A sudden spike in new accounts with similar handles (e.g., @CNN_Newswire vs. @CNN_News) often signals the presence of a coordinated disinformation network. Research by Bellutta et al. (2023) highlights that analyzing account creation patterns can help uncover inauthentic communities.

One key indicator is handle similarity—when multiple accounts share minor variations or misspellings of well-known names or organizations (e.g., @amnesty_internati or @cnn_newswire), it's often a sign of sockpuppeting or impersonation. These tactics are commonly used to spread political manipulation, target public figures, or mislead audiences.

Handles are just one of several attributes analyzed in coordinated behavior detection, alongside user ID, timestamps, application data, and IP addresses. Suspicious patterns become even clearer when similar handles appear across multiple accounts within the same network or event.

While this detection method is most effective on platforms like X (formerly Twitter)—which allows semantic, human-readable identifiers—it remains a valuable tool for exposing troll farms and impersonation attempts.

🔍 Detection Tip: Monitoring naming patterns can reveal orchestrated influence operations. For example, in real-world disinformation campaigns documented in the CIB Detection Tree (4th branch), many similar handles are often created in clusters over a short time frame—a clear sign of coordinated activity.
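Both signals from this section, handle similarity and clustered creation dates, can be combined in a few lines. The sketch below uses the standard library's `difflib.SequenceMatcher` ratio after stripping case, `@` and underscores, and flags pairs created within a week of each other. The similarity measure, the 0.75 threshold, the 7-day gap and the day-index input format are all assumptions for illustration; the handles echo the examples above.

```python
from difflib import SequenceMatcher
from itertools import combinations

def clean_handle(handle):
    """Lowercase and drop '@' and '_' so cosmetic variants compare equal."""
    return handle.lower().lstrip("@").replace("_", "")

def handle_similarity(h1, h2):
    """Similarity ratio in [0, 1] between two cleaned handles."""
    return SequenceMatcher(None, clean_handle(h1), clean_handle(h2)).ratio()

def suspicious_handle_pairs(accounts, min_similarity=0.75, max_gap_days=7):
    """accounts: list of (handle, creation_day), creation_day being an integer day index.
    Flags pairs with near-identical handles created within `max_gap_days` of each other."""
    flagged = []
    for (h1, d1), (h2, d2) in combinations(accounts, 2):
        if abs(d1 - d2) <= max_gap_days:
            score = handle_similarity(h1, h2)
            if score >= min_similarity:
                flagged.append((h1, h2, round(score, 2)))
    return flagged

accounts = [
    ("@CNN_News", 100),
    ("@CNN_Newswire", 102),
    ("@cnn_newsw1re", 103),  # digit swapped in for a letter
    ("@GardeningFan", 101),
]
for pair in suspicious_handle_pairs(accounts):
    print(pair)
# ('@CNN_News', '@CNN_Newswire', 0.78)
# ('@CNN_News', '@cnn_newsw1re', 0.78)
# ('@CNN_Newswire', '@cnn_newsw1re', 0.91)
```

Comparing every pair is quadratic, so at scale one would first bucket accounts by creation week or by a handle prefix; a watchlist of impersonated brands can also replace the all-pairs loop.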

Related DISARM Tactics:

Prepare tactic: T0092 “Build Network”

Prepare tactic: T0097 "Create personas"

Prepare tactic: T0097.001 "Backstop personas"

Prepare tactic: T0099 "Prepare Assets Impersonating Legitimate Entities"

Prepare tactic: T0099.002 "Spoof/parody account/site"

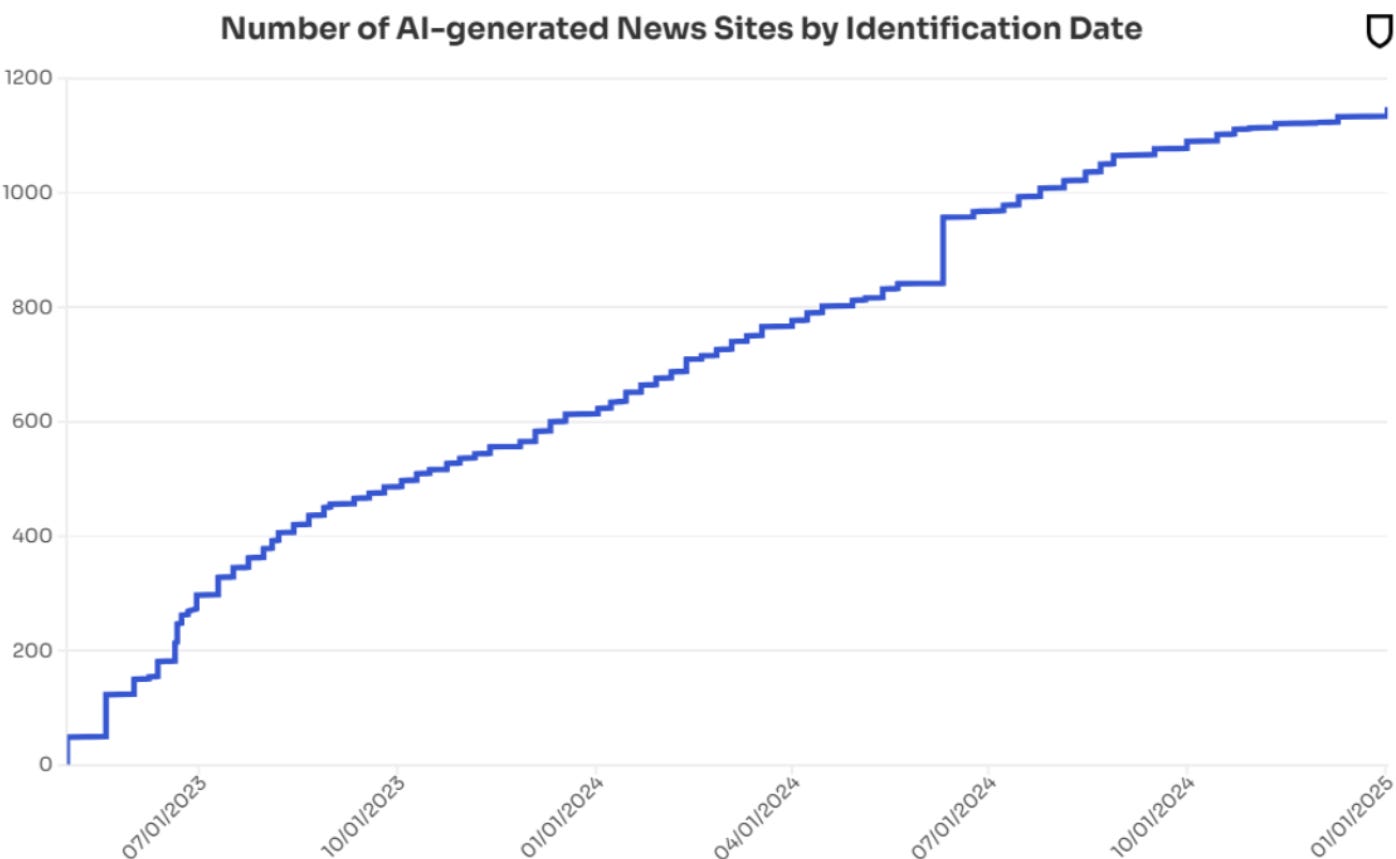

10. Coordinated Dissemination of Disinformation: Amplifying Narratives Through Bad Actor Domains

Disinformation campaigns often rely on bad actor domains—websites designed to spread misleading or false narratives. These domains are frequently flagged by news credibility databases, such as Media Bias/Fact Check (MBFC) or state-affiliated domain lists, which help identify sources known for unreliable or biased content. For more resources, see my post “Tools and Techniques for Tackling Disinformation: A Closer Look.”

To amplify false narratives, bad actors may operate multiple interconnected domains, each reinforcing the same misinformation. By cross-linking content between these sites, they create a false sense of credibility, making their narratives appear more legitimate. In some cases, they even impersonate reputable news outlets—for example, Russian actors have mimicked sites like The Washington Post and Fox News to spread disinformation during real-world events.

Unlike coordinated link sharing, where multiple accounts push the same URL in a short time frame, coordinated domain tactics can be harder to detect since bad actors distribute their links more subtly. This makes tracking co-domain networks—the relationships between deceptive websites—a crucial method for identifying and disrupting disinformation campaigns.
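Because co-domain tactics spread links out over time, one way to sketch a co-domain network is to drop the time window entirely and link two domains whenever enough distinct accounts have shared both. An optional watchlist (for example, domains already flagged in a credibility database) restricts the output to edges touching known bad actors. The thresholds, the watchlist format and the `.example` domains are hypothetical.

```python
from collections import defaultdict
from itertools import combinations
from urllib.parse import urlsplit

def domain_of(url):
    """Hostname without a leading 'www.' (empty string if the URL has no host)."""
    host = (urlsplit(url).hostname or "").lower()
    return host[4:] if host.startswith("www.") else host

def co_domain_edges(shares, min_shared_accounts=2, watchlist=None):
    """shares: iterable of (account, url).
    Links two domains when >= min_shared_accounts distinct accounts shared both.
    If `watchlist` (a set of domains) is given, only edges touching it are kept."""
    accounts_by_domain = defaultdict(set)
    for account, url in shares:
        domain = domain_of(url)
        if domain:
            accounts_by_domain[domain].add(account)
    edges = {}
    for d1, d2 in combinations(sorted(accounts_by_domain), 2):
        shared = accounts_by_domain[d1] & accounts_by_domain[d2]
        if len(shared) >= min_shared_accounts and (
            watchlist is None or d1 in watchlist or d2 in watchlist
        ):
            edges[(d1, d2)] = len(shared)
    return edges

shares = [
    ("u1", "https://www.fake-post.example/a"), ("u1", "https://mirror-news.example/b"),
    ("u2", "https://fake-post.example/c"), ("u2", "https://mirror-news.example/d"),
    ("u3", "https://bbc.co.uk/news"), ("u3", "https://fake-post.example/e"),
]
print(co_domain_edges(shares))  # {('fake-post.example', 'mirror-news.example'): 2}
```

Edges produced this way are leads rather than verdicts; registration records, hosting and IP data, discussed next, are what tie a domain cluster to an operator.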

Monitoring state-linked domains provides early warning signs of influence operations. Analyzing factors like domain registration patterns, hosting providers, and IP geolocation can reveal the operational methods used by state-affiliated cyber units. Unlike social media accounts, these domains are public-facing assets, making them easier to track for large-scale disinformation monitoring.

For example, CheckFirst’s report “Operation Overload: How Pro-Russian Actors Flood Newsrooms with Fake Content” examines how Kremlin-backed websites push specific narratives. Similarly, The Economist article “Disinformation is on the Rise. How Does It Work?” explores how AI-generated news sites are used to spread falsehoods. NewsGuard has flagged over 1,150 AI-generated news sites, showing how automation is increasingly exploited to fuel disinformation at scale.

Related DISARM Tactics:

Prepare tactic “TA16 Establish Legitimacy”

11. Activity Syndication: Detecting Coordination Through Temporal Posting Patterns

A lesser-known but powerful coordination technique is Activity Syndication, which identifies similarities in posting rhythms rather than direct content interactions. Unlike interaction-based and content-focused methods that track coordinated post sharing, URL distribution, or image propagation, this approach analyzes how accounts post or repost content in synchronized time patterns, regardless of platform or specific content.

This makes Activity Syndication highly resilient to manipulation. Since it focuses on timing rather than content, it remains effective even if bad actors modify or distort the message. The technique is also versatile, extending beyond posts to other online behaviors, such as likes, follows, or even account handle changes, as long as relevant data is available.

State-Backed Coordination & Temporal Patterns

One of the most studied applications of Activity Syndication is in state-backed disinformation campaigns and troll farms. These operations often follow structured shifts that align with time zones and geopolitical regions. Analysts have observed that accounts linked to state actors operate in synchronized waves, posting in predictable patterns that match standard working hours in their respective countries.

Automated Detection & Fingerprinting

Identifying these patterns manually is mentally exhausting and time-intensive, but semi-automated tools can help by ranking accounts based on their rhythmic similarity. This allows analysts to focus on the most suspicious accounts while reducing manual workload.

This technique also plays a critical role in Coordinated Inauthentic Behavior (CIB) detection, helping to uncover:

✅ Use of bots to maintain a consistent posting schedule

✅ Alignment with a shared agenda across multiple accounts

✅ Overlaps with other CIB detection methods

To enhance detection, analysts can build a “fingerprint” database that aggregates time-series data, enabling visual analysis of posting behaviors. When combined with additional metadata—such as state affiliation, geolocation, and language use—this approach provides deeper insights into coordinated operations.
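A minimal version of such a fingerprint is a 24-bin histogram of when an account is active (in UTC), normalized to shares of total activity; accounts can then be ranked by cosine similarity of their fingerprints, as the semi-automated ranking above suggests. The hour-of-day binning, cosine similarity and all names are illustrative assumptions; an hour-of-week (168-bin) variant would also capture weekday shift patterns.

```python
import math
from datetime import datetime, timezone
from itertools import combinations

def hourly_fingerprint(timestamps):
    """24-bin share of activity per UTC hour, from unix timestamps."""
    bins = [0] * 24
    for ts in timestamps:
        bins[datetime.fromtimestamp(ts, tz=timezone.utc).hour] += 1
    total = sum(bins)
    return [b / total for b in bins] if total else bins

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0

def rank_by_rhythm(activity, top_n=10):
    """activity: {account: [unix_timestamps]}. Returns the most rhythm-similar pairs first."""
    prints = {acc: hourly_fingerprint(ts) for acc, ts in activity.items()}
    scored = [
        (round(cosine(prints[a], prints[b]), 3), a, b)
        for a, b in combinations(sorted(prints), 2)
    ]
    return sorted(scored, reverse=True)[:top_n]

HOUR, DAY = 3600, 86400
activity = {
    "troll_a": [9 * HOUR, 10 * HOUR, DAY + 9 * HOUR],
    "troll_b": [9 * HOUR + 60, DAY + 10 * HOUR, 2 * DAY + 9 * HOUR],
    "night_owl": [2 * HOUR, 3 * HOUR, DAY + 2 * HOUR],
}
print(rank_by_rhythm(activity)[0])  # (1.0, 'troll_a', 'troll_b')
```

The two "office hours" accounts match perfectly despite posting on different days, while the night-time account scores 0 against both. Scheduled posting by ordinary users produces similar rhythms, which is why this ranking should feed other signals rather than stand alone.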

A useful reference for investigating automated posting behavior is outlined in the CIB Detection Tree (4th branch). While automated posting tools are not always indicators of inauthentic activity (many users schedule social media posts), when combined with other coordination signals, Activity Syndication becomes a valuable piece of the larger detection puzzle.

Like other CIB indicators, Activity Syndication aligns with several DISARM tactics, including:

Prepare Tactic: T0091.003 "Enlist Troll Accounts"

Prepare Tactic: T0092 "Build Network"

Prepare Tactic: T0092.003 "Create Community or Sub-group"

Prepare Tactic: T0093.002 "Acquire Botnets"

The Shift from Micro-Level to Macro-Level Detection

As highlighted in this article and my previous post, "Bridging the Gap: Rethinking OSINT Workflows to Counter Disinformation", one of the biggest challenges in disinformation and CIB analysis is attribution—understanding who is behind an influence operation and how their messages are received. Disinformation studies increasingly differentiate between:

Disinformation – false information spread with intent to deceive

Misinformation – incorrect information shared without malicious intent

Malinformation – true information strategically leaked to cause harm (e.g., email leaks in political campaigns)

Early detection efforts primarily focused on identifying social bots based on individual account behaviors (micro-level detection). However, the Meta Threat Disruptions Team and other researchers have emphasized shifting towards macro-level detection—analyzing coordinated strategies across networks rather than just spotting isolated inauthentic accounts. This shift is crucial because:

Many disinformation campaigns involve human operators, not just bots

Isolated account behaviors often fail to reveal the full scope of coordination

Network-level analysis offers a broader understanding of influence operations

This shift has fueled debate over different detection methods, such as community detection, multilayer network analysis, and neural models. From an OSINT industry perspective, I argue that community detection remains a more practical and accessible technique for analysts tackling real-world disinformation.

A comprehensive approach combines multiple coordination signals, integrating social media datasets with external intelligence to improve attribution. This multi-layered strategy provides context and supporting evidence, strengthening analysts' ability to detect and understand influence operations. Ultimately, the goal is to enhance exploratory data analysis workflows, allowing researchers to move beyond basic detection and gain deeper insights into orchestrated disinformation campaigns.
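Combining signals can start very simply: take the account-pair edges produced by each detector (co-reposting, co-hashtags, posting rhythm and so on) and keep only pairs supported by several independent signals, recording which ones. The sketch below assumes each detector has already produced a list of account pairs; the signal names, the two-signal minimum and the function name are hypothetical, and the resulting edge list is the kind of input a community detection step would take next.

```python
from collections import defaultdict

def combine_signals(signal_edges, min_signals=2):
    """signal_edges: {signal_name: iterable of (account_a, account_b)}.
    Returns {(a, b): sorted list of supporting signals} for pairs seen in >= min_signals signals."""
    support = defaultdict(set)
    for signal, edges in signal_edges.items():
        for a, b in edges:
            if a != b:
                support[tuple(sorted((a, b)))].add(signal)  # undirected pair key
    return {
        pair: sorted(signals)
        for pair, signals in support.items()
        if len(signals) >= min_signals
    }

signals = {
    "co_repost": [("a", "b"), ("c", "d")],
    "co_hashtag": [("b", "a"), ("e", "f")],
    "rhythm": [("a", "b"), ("c", "d")],
}
for pair, evidence in combine_signals(signals).items():
    print(pair, evidence)
# ('a', 'b') ['co_hashtag', 'co_repost', 'rhythm']
# ('c', 'd') ['co_repost', 'rhythm']
```

Keeping the list of supporting signals, rather than a single score, preserves the evidence trail analysts need when presenting an attribution case.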

Conclusion: Toward Smarter Detection of Coordinated Disinformation

As disinformation tactics evolve, so must our detection strategies. Moving beyond micro-level bot detection to comprehensive macro-level analysis is key to uncovering coordinated manipulation at scale. By integrating computational methods with OSINT workflows and industry frameworks, analysts can strengthen attribution efforts and build more resilient defenses against digital deception. The fight against disinformation isn’t just about detection; it’s about staying ahead of increasingly sophisticated influence campaigns.

Want to know more about which techniques can be implemented and how they can be scaled for effective disinfo detection? Stay tuned! (Note: a few deep dives into advanced techniques will be available to paid subscribers only.)