Tools and Techniques for Tackling Disinformation: A Closer Look

In my previous post, “Coordinated Sharing Behavior Detection Conference at Sheffield”, I delved into the challenges posed by the gap between academic research and industry needs in tackling disinformation. You might be wondering: Are there practical tools out there—whether commercial solutions or open-source platforms—that effectively monitor and detect disinformation events?

This follow-up post explores the tools available today, ranging from open-source resources and academic initiatives to advanced commercial solutions. The goal is to equip you with insights into how these technologies are shaping our ability to identify and combat disinformation.

Academic and Open-Source Tools

vera.ai: InVID/WeVerify Verification Plugin

The InVID browser plugin (details here) and WeVerify platform (details here) are excellent tools developed under the EU-funded vera.ai project. Their goal? To build trust in digital spaces and promote media literacy. These tools offer a range of features to help users verify online content, particularly from social media.

Key Features:

Reverse Image Search: Identify where an image originates and check for manipulations, reused content, or deepfakes.

Video Analysis: Tools for extracting keyframes, comparing frames, and verifying timecodes make spotting edited videos easier.

Social Media Insights: WeVerify uncovers valuable metadata—like account creation dates and posting activity—and even analyzes propagation patterns and community connections, including early signals on fringe platforms like 4chan or 8chan.

Why It’s Valuable: These user-friendly browser extensions provide real-time verification tools for on-the-go analysis, empowering users to tackle misinformation during their web browsing or social media activity.

For a closer look, visit the InVID verification plugin page and follow their social media accounts for the latest updates (@veraai_eu, @jospang, @jospang.bsky.social, and the earlier accounts @WeVerify and @InVID_EU).

Hamilton 2.0 Dashboard

The Hamilton 2.0 Dashboard (previously known as the Hamilton 68 Dashboard), run by the Alliance for Securing Democracy, provides insights into narratives promoted by state-backed media outlets and government officials from Russia, China, and Iran. It monitors platforms like Telegram, YouTube, and Facebook, as well as official statements and press releases.

While its analysis of Twitter (now X) data is no longer current due to policy changes, its Social Data Search tool still offers access to historical data. Note, however, that the dashboard has drawn controversy in the past for flagging legitimate accounts in error, so its findings come with disclaimers.

Stay updated with their latest insights by following them on social media at @SecureDemocracy.

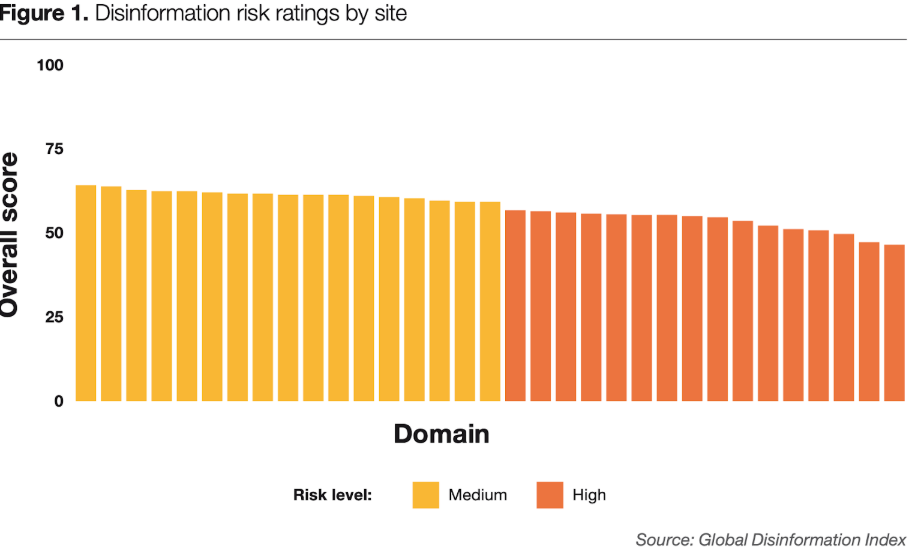

Global Disinformation Index (GDI)

The Global Disinformation Index (learn more) is not a direct detection tool but provides valuable research for combating disinformation. Its focus is on disrupting the economic incentives of misinformation through comprehensive risk assessments.

Highlights:

Media Market Risk Assessments: Evaluate news domains for disinformation risks.

Country-Specific Reports: Cover disinformation in regions like Thailand, Bangladesh, and Japan.

Dynamic Exclusions List (DEL): Identifies and updates the worst offenders globally, helping ad tech platforms reduce the funding for these sources.

While GDI primarily supports research and policymaking, its tools are invaluable for understanding global disinformation trends.

Criticism of GDI's Practices

It is worth noting that the GDI has faced notable criticism, with allegations suggesting that it seeks to discredit certain news organizations it deems unfavorable. Reports indicate that its efforts may extend to undermining the revenue streams of these outlets as a way to constrain their operations. These actions have sparked ethical and operational debates among stakeholders, particularly given the organization's funding sources, which include NATO governments (e.g., UK FCDO from 2019–2022), organizations such as UnHerd, and DEL data licenses. This has led to heightened scrutiny of GDI’s methods and their broader implications.

State Media Monitor

The State Media Monitor, developed by the Media and Journalism Research Center, is a standout resource for analyzing the editorial independence—or lack thereof—of media outlets linked to governments worldwide. This tool offers access to the most comprehensive state media database ever created. Data collection for this project has been ongoing since 2004, under the leadership of Marius Dragomir, a seasoned journalist, media scholar, and the center’s director. Over the years, more than 600 researchers have contributed to this extensive dataset, which has supported initiatives such as Television Across Europe, Mapping Digital Media, and the Media Influence Matrix.

One of its key strengths lies in the annual updates conducted by a consortium of media experts, ensuring that the database reflects the latest shifts in global media landscapes. Updates typically roll out in September or October, providing fresh insights for practitioners and researchers alike. For OSINT professionals, the State Media Monitor is an invaluable resource for understanding the political and economic contexts of state-affiliated media, helping to uncover potential biases and influence campaigns across the globe. This matters all the more now that Twitter (now X) has dropped its ‘state-affiliated’ and ‘government-funded’ labels, which it did in 2023.

Open Measures (formerly SMAT)

Open Measures provides an open-source Social Media Analysis Toolkit that’s ideal for investigating coordinated disinformation campaigns, particularly on fringe platforms like 4chan, Gab, and Parler.

Key Advantage: This tool includes a public API, making it accessible for researchers, journalists, and activists, regardless of budget constraints. It's a valuable resource for studying coordinated behavior on fringe platforms.

Communalytic

Communalytic is a robust research tool designed for analyzing social media data from platforms like Reddit, Telegram, YouTube, Facebook, and Twitter.

Features:

Network Analysis: Generate signed and unsigned networks, including link-sharing networks and reply-to networks.

A signed network is a network whose edges carry additional information such as positive or negative signs or scores (weights). To turn a network into a signed network in Communalytic, users can run additional analyses (toxicity and/or sentiment) before creating a network representation of their dataset. The resulting toxicity scores and/or sentiment polarity scores are added as weights to edges in the network and visualized for easier exploration and analysis. This feature can be used to identify and visually highlight interactions of interest (e.g., anti-social interactions) within the network.

Content Analysis: Examine datasets for patterns in communication or link-sharing activity.
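To make the idea of a signed network more concrete, here is a minimal sketch in plain Python. It is not Communalytic's implementation: the reply records, the toxicity scores, and the 0.7 threshold are all hypothetical values chosen for illustration. It only shows how per-message scores can be aggregated into edge weights and then used to highlight interactions of interest.

```python
from collections import defaultdict

# Hypothetical reply records: (author, replied_to, toxicity score in [0, 1]).
# In practice the scores would come from a separate toxicity or sentiment model.
replies = [
    ("alice", "bob", 0.05),
    ("bob", "alice", 0.10),
    ("carol", "bob", 0.92),
    ("carol", "bob", 0.80),
    ("dave", "alice", 0.30),
]


def build_signed_network(records):
    """Aggregate replies into directed edges weighted by mean toxicity."""
    scores = defaultdict(list)
    for source, target, toxicity in records:
        scores[(source, target)].append(toxicity)
    return {edge: sum(vals) / len(vals) for edge, vals in scores.items()}


def flag_edges(network, threshold=0.7):
    """Return edges whose mean toxicity meets the (assumed) threshold."""
    return sorted(edge for edge, weight in network.items() if weight >= threshold)


network = build_signed_network(replies)
flagged = flag_edges(network)
print(flagged)
```

With this toy data, only the carol-to-bob edge is flagged, which is the kind of edge a visualization would highlight for closer inspection.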

Reference

Gruzd, A., Mai, P., & Soares, F. B. (2023, September). From Trolling to Cyberbullying: Using Machine Learning and Network Analysis to Study Anti-Social Behavior on Social Media. In Proceedings of the 34th ACM Conference on Hypertext and Social Media (pp. 1-2). Access via https://dl.acm.org/doi/abs/10.1145/3603163.3610531 (pdf)

Coordination Network Toolkit

Developed by Dr. Graham at Queensland University of Technology (QUT), this Python-based toolkit identifies coordination networks in social media data. Its features include detecting co-retweets, identical text sharing, co-links, and co-replies.

See docs:

https://github.com/QUT-Digital-Observatory/coordination-network-toolkit/blob/main/docs/tutorial.md

https://researchdata.edu.au/coordination-network-toolkit/1770792
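As a rough illustration of what co-retweet detection means, the sketch below links two accounts whenever they retweet the same tweet within a short time window, and keeps only pairs that do so repeatedly. It is a simplified stand-in written with the standard library, not the toolkit's own code or API: the record format, the 60-second window, and the minimum weight of 2 are assumptions made for the example.

```python
from collections import Counter, defaultdict
from itertools import combinations

# Hypothetical retweet records: (account, retweeted_tweet_id, unix_timestamp).
retweets = [
    ("acct_a", "t1", 100),
    ("acct_b", "t1", 130),
    ("acct_c", "t1", 900),
    ("acct_a", "t2", 2000),
    ("acct_b", "t2", 2040),
    ("acct_c", "t3", 3000),
]


def co_retweet_network(records, window_seconds=60, min_weight=2):
    """Weight each account pair by the number of tweets both retweeted within the window."""
    by_tweet = defaultdict(list)
    for account, tweet_id, timestamp in records:
        by_tweet[tweet_id].append((account, timestamp))

    edge_weights = Counter()
    for shares in by_tweet.values():
        pairs = set()  # count each pair at most once per tweet
        for (a1, t1), (a2, t2) in combinations(shares, 2):
            if a1 != a2 and abs(t1 - t2) <= window_seconds:
                pairs.add(tuple(sorted((a1, a2))))
        edge_weights.update(pairs)

    return {pair: w for pair, w in edge_weights.items() if w >= min_weight}


edges = co_retweet_network(retweets)
print(edges)
```

The same pattern extends to co-links or co-replies by swapping the tweet ID for a URL or for the ID of the post being replied to.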

CooRnet

An R package by Prof. Giglietto at Università degli Studi di Urbino Carlo Bo, CooRnet detects Coordinated Link Sharing Behavior (CLSB) using CrowdTangle or MediaCloud data. It’s ideal for identifying coordination in link-sharing; an application to the 2018 and 2019 Italian elections was presented at ECREA 2021.
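One design question in link-sharing analysis is how short a gap between two shares must be to count as "coordinated". The sketch below derives that interval from the data itself and then flags pairs of entities that share the same URLs within it. It is written in Python for illustration and is not CooRnet's algorithm: the quantile heuristic, the record format, and all parameter values are assumptions.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical share records: (entity, url, unix_timestamp).
shares = [
    ("page_1", "http://example.org/a", 0),
    ("page_2", "http://example.org/a", 10),
    ("page_3", "http://example.org/a", 5000),
    ("page_1", "http://example.org/b", 100),
    ("page_2", "http://example.org/b", 120),
    ("page_4", "http://example.org/b", 9000),
]


def estimate_interval(records, quantile=0.5):
    """Pick an interval from the gaps between consecutive shares of each URL (assumed heuristic)."""
    by_url = defaultdict(list)
    for _, url, timestamp in records:
        by_url[url].append(timestamp)
    gaps = []
    for stamps in by_url.values():
        stamps.sort()
        gaps.extend(later - earlier for earlier, later in zip(stamps, stamps[1:]))
    if not gaps:
        return 0
    gaps.sort()
    return gaps[int(quantile * (len(gaps) - 1))]


def coordinated_pairs(records, interval, min_urls=2):
    """Count, per entity pair, the distinct URLs both shared within the interval."""
    by_url = defaultdict(list)
    for entity, url, timestamp in records:
        by_url[url].append((entity, timestamp))
    urls_per_pair = defaultdict(set)
    for url, items in by_url.items():
        for (e1, t1), (e2, t2) in combinations(items, 2):
            if e1 != e2 and abs(t1 - t2) <= interval:
                urls_per_pair[tuple(sorted((e1, e2)))].add(url)
    return {pair: len(urls) for pair, urls in urls_per_pair.items() if len(urls) >= min_urls}


interval = estimate_interval(shares)
pairs = coordinated_pairs(shares, interval)
print(interval, pairs)
```

Requiring repeated co-sharing across several URLs, rather than a single fast share, is what separates plausible coordination from coincidence in a sketch like this.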

Find_hccs

Developed by Derek Weber at The University of Adelaide, this Python library, described in a 2021 paper, uncovers highly coordinating communities (HCCs) on social media. It employs methods like co-retweets, co-hashtags, and co-mentions to reveal latent coordination networks.

The project provides a pipeline of processing steps to uncover HCCs within latent coordination networks (LCNs), which are built by inferring connections between social media actors based on their behaviour.

Three detection methods are implemented: Boost (via co-retweets), Pollute (via co-hashtags), and Bully (via co-mentions).
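The two-stage pipeline described above (build a latent coordination network, then extract communities from it) can be sketched as follows for the co-hashtag case. This is an illustrative toy, not the library's code: the post format and time-window indices are hypothetical, and thresholding edges and taking connected components is a simple stand-in for whatever community extraction the library actually uses.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical posts: (account, time window index, hashtags used).
posts = [
    ("u1", 0, {"#vote", "#fraud"}),
    ("u2", 0, {"#fraud"}),
    ("u3", 0, {"#vote"}),
    ("u1", 1, {"#fraud"}),
    ("u2", 1, {"#fraud"}),
    ("u4", 1, {"#cats"}),
    ("u5", 1, {"#cats"}),
]


def build_lcn(records):
    """Latent coordination network: edge weight = number of (window, hashtag) co-uses."""
    users_by_key = defaultdict(set)
    for account, window, tags in records:
        for tag in tags:
            users_by_key[(window, tag)].add(account)
    weights = defaultdict(int)
    for users in users_by_key.values():
        for pair in combinations(sorted(users), 2):
            weights[pair] += 1
    return dict(weights)


def extract_hccs(lcn, min_weight=2):
    """Keep heavy edges and return connected components as candidate HCCs."""
    adjacency = defaultdict(set)
    for (a, b), weight in lcn.items():
        if weight >= min_weight:
            adjacency[a].add(b)
            adjacency[b].add(a)
    seen, communities = set(), []
    for node in sorted(adjacency):
        if node in seen:
            continue
        stack, component = [node], set()
        while stack:  # depth-first traversal of one component
            current = stack.pop()
            if current in component:
                continue
            component.add(current)
            stack.extend(adjacency[current] - component)
        seen |= component
        communities.append(sorted(component))
    return communities


lcn = build_lcn(posts)
hccs = extract_hccs(lcn)
print(hccs)
```

In this toy data only u1 and u2 co-use a hashtag in more than one window, so they form the single candidate community. Swapping hashtags for retweeted tweet IDs or mentioned accounts would give analogous sketches of the co-retweet and co-mention strategies.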

Commercial Solutions

NexusXplore by OSINT Combine: An All-in-One OSINT Solution

If you're an OSINT analyst looking for a versatile tool, NexusXplore is a name you’ll likely encounter. This AI-enabled software platform, designed by intelligence experts at OSINT Combine, consolidates search and analysis across the surface, deep, and dark web—all within a single interface.

NexusXplore is praised for its streamlined workflows, enabling analysts to quickly extract actionable insights. Whether you’re uncovering threats or producing intelligence reports, its browser-based ecosystem makes it accessible with just an internet connection.

Why it’s Effective:

Advanced search and data collection capabilities across surface, deep and dark webs in a single user interface.

Integration of thousands of datasets into a secure, intuitive platform.

Significant support for low-attribution, high-productivity investigations.

However, some users point out a learning curve and resource demands, which may challenge newcomers or organizations with limited budgets.

i2 Analyst’s Notebook: Visual Analysis at Its Best

For analysts tackling disinformation campaigns or investigating criminal activities, i2 Analyst’s Notebook has been a trusted choice. Known for its exceptional visualization and link analysis capabilities, this tool simplifies the process of mapping relationships, patterns, and trends.

What Sets It Apart:

Dynamic visualizations of complex data relationships.

Seamless integration with various data sources, including social media and internal databases.

Customizable workflows for tailored investigations.

While its advanced features are powerful, the steep learning curve and high costs can be limiting factors for smaller teams. Additionally, it requires well-structured input data for optimal results.

From tracking the evolution of disinformation narratives to uncovering financial crimes, i2 proves invaluable across diverse investigative contexts.

Ariadne by Valent Project: AI-Driven Disinformation Detection

Valent Project’s Ariadne system brings AI to the forefront of disinformation analysis. Designed to detect, predict, and respond to online manipulation, Ariadne excels at narrative extraction, sentiment analysis, and tracking inauthentic activity.

Core Strengths:

Detects malicious activities, including bot-driven campaigns.

Tracks the evolution of disinformation narratives over time.

Integrates threat intelligence for context-rich analysis.

Generates customized, data-driven reports.

Despite its promise, Ariadne's effectiveness depends on the breadth of its data sources. Currently, it appears to focus more on major platforms, with limited coverage of fringe networks.

Reference

"Disinformation is on the rise. How does it work?" The Economist, May 1, 2024. Retrieved from https://archive.ph/TUbje

Google Jigsaw Assembler: Experimental Disinformation Defense

Launched in 2020, Google Jigsaw’s Assembler platform takes an experimental approach to identifying manipulated media. With tools for deepfake detection and coordinated disinformation/network analysis, it’s aimed at helping newsrooms and researchers stay ahead of evolving manipulation techniques.

Key Features:

Uses multiple detectors to analyze media for various types of manipulation.

Focuses on AI-generated content, including deepfake detection.

Provides collaboration opportunities for researchers developing detection technologies.

Still in its testing phase, Assembler’s impact is yet to be fully realized. Early adopters appreciate its potential but acknowledge the challenge of keeping pace with sophisticated manipulation tactics.

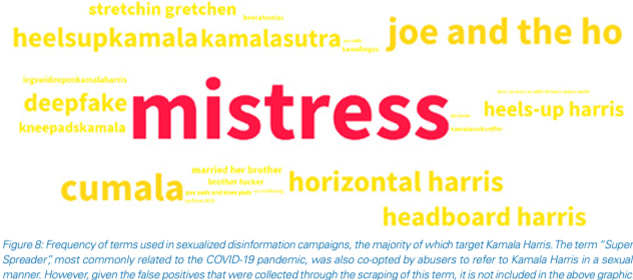

Moonshot: Combining Technology with Expertise

Formerly Moonshot CVE, Moonshot takes a comprehensive approach to countering disinformation, leveraging real-time threat monitoring and custom research. Known for its Redirect Method, which redirects users seeking extremist or false content to credible alternatives, Moonshot offers tailored solutions for tackling disinformation networks.

Why It Works:

Monitors online threats across platforms and languages in real-time.

Builds audience analysis tools to counter disinformation and conspiracy theories.

Partners with organizations to address specific threats, from election integrity to pandemic-related falsehoods.

In practice, Moonshot has uncovered insights like the rise in anti-Chinese hashtags during COVID-19 and gender-based disinformation campaigns targeting prominent figures. Its dual focus on technological innovation and human expertise positions it as a leader in online threat mitigation.

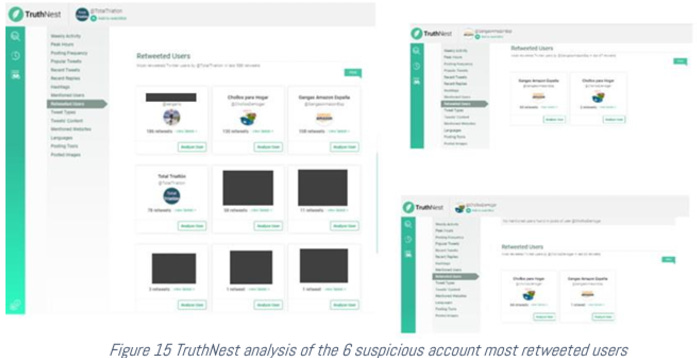

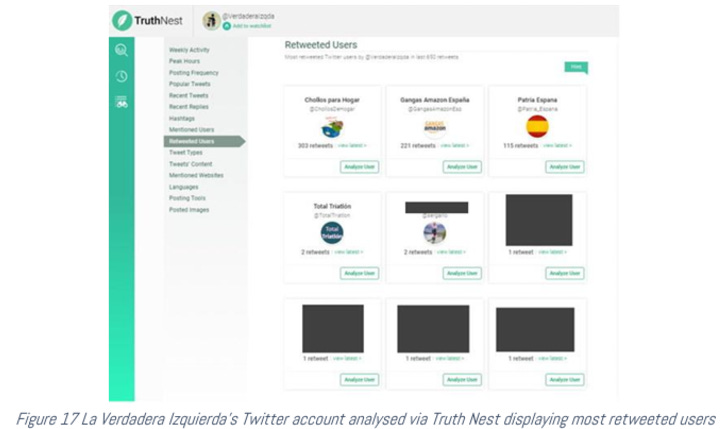

TruthNest: Analyzing Social Media and Networks

TruthNest is a platform specifically tailored for analyzing and detecting disinformation, focusing on social media data and network behavior.

Key Features:

Content Moderation: Assists in identifying and flagging harmful or misleading content.

Trend Tracking: Monitors emerging topics and trends, enabling early detection of potential disinformation campaigns.

Sentiment Analysis: Evaluates public sentiment around specific topics to uncover manipulation attempts.

Network Analysis:

Identifies bots, influencers, and coordinated inauthentic behavior (CIB).

Maps disinformation networks, visualizing connections between key players and "super spreaders."

Provides actionable insights for countering disinformation campaigns.

Case Study: Monetization of Disinformation

In the EU Disinfo Lab’s report, The Monetization of Disinformation through Amazon, TruthNest was instrumental in uncovering a network of accounts operated by the same entity. The platform's cross-analysis revealed strong ties between the accounts, shedding light on coordinated activities.

While TruthNest offers robust tools for analyzing disinformation, its algorithms and data sources remain proprietary, primarily catering to businesses and organizations.

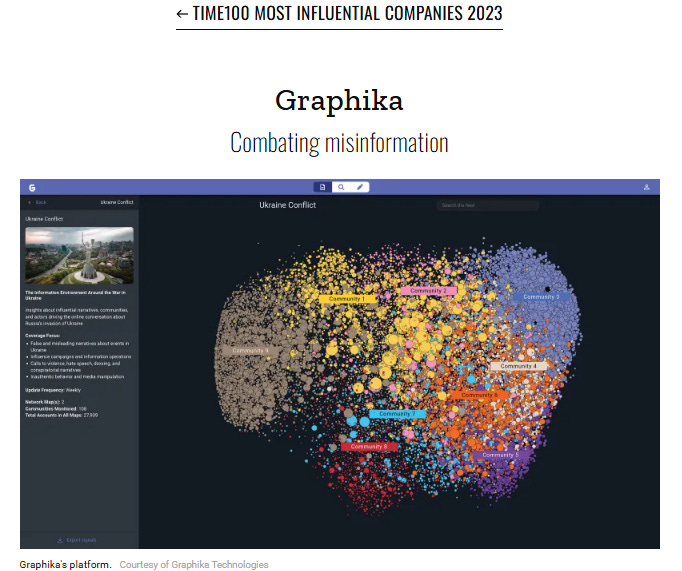

Graphika: Visualizing Information Networks

Graphika is a cutting-edge platform for analyzing and visualizing social media networks, particularly their role in spreading disinformation. Recognized as one of Time's 100 most influential companies in 2023, Graphika stands out for its ability to untangle the complexities of online interactions.

Key Offerings:

Large Data Sets: Gathers and processes extensive datasets from platforms like Twitter, Facebook, and Reddit.

Influencer and Community Mapping: Identifies key actors and communities driving disinformation narratives.

Conversation Tracking: Traces how disinformation spreads, tracking threads, memes, and topics over time.

Network Visualization: Creates detailed maps showing connections between accounts, hashtags, and topics to detect manipulation.

Bot and Troll Detection: Pinpoints automated and fake accounts fueling disinformation.

Disinformation Campaign Analysis: Tracks the lifecycle of disinformation efforts, using proprietary “Coordination Framework technology” that analyzes network, semantic, and temporal dimensions.

With its robust capabilities, Graphika excels in revealing how disinformation spreads and evolves, making it invaluable for researchers and analysts.

Blackbird.AI: Narrative Intelligence Redefined

Blackbird.AI leverages artificial intelligence to detect and counteract disinformation and narrative manipulation. Their flagship platform, Constellation Narrative Intelligence, combines advanced analytics with intuitive tools for combating harmful narratives.

Standout Features:

Narrative Intelligence: Tracks the emergence and spread of narratives across social media platforms, profiling potential disinformation campaigns.

Network Visualization: Highlights how harmful narratives propagate, identifying bot and troll activity alongside influential cohorts.

Actor Intelligence: Maps key influencers and coalitions driving specific narratives.

Real-Time Monitoring: Provides continuous updates on potential threats, enabling rapid responses.

Risk Intelligence: Assesses the potential impact of disinformation campaigns, offering actionable insights to mitigate risks.

Advanced Technologies:

Blackbird.AI recently launched Compass Vision, a tool designed to detect AI-generated and manipulated content like deepfakes. Complementing this is the RAV3N Risk LLM, a purpose-built AI model capable of analyzing global narratives in real-time. This technology provides automated narrative feeds, custom threat reports, and context-aware analysis, making it a powerful ally in the fight against disinformation.

Use Cases:

From protecting brand reputations to countering deepfake imagery, Blackbird.AI has demonstrated its impact across various industries. Their solutions empower organizations to detect and address harmful narratives before significant damage occurs.

Fivecast: A Comprehensive OSINT Solution

Founded in 2017 in Australia, Fivecast provides open-source intelligence (OSINT) tools for national security, defense, law enforcement, and financial intelligence sectors. Its flagship product, Fivecast ONYX, offers end-to-end intelligence solutions with cutting-edge features:

Data Coverage: Access billions of social media accounts, people records, companies, and even dark web data. Targeted data collection also matters for managed attribution in the OSINT process.

Network Analysis: Uncover connections between key players, groups, and channels spreading disinformation. See use case here.

AI-Driven Risk Detection: Detect disinformation narratives and prioritize threats using customizable AI/ML tools.

Global Reach: Monitor content in over 100 languages, with tools for dark web and geopolitical threat analysis.

From mapping adversarial networks to identifying influencers in manipulative campaigns, Fivecast delivers an advanced toolkit for tackling disinformation. Learn more about its capabilities here.

Zignal Labs: Real-Time Narrative Intelligence

Zignal Labs specializes in real-time analysis of online narratives, helping organizations protect their people, places, and reputations. Its platform processes over 8 billion daily data points across 50M+ sources, offering features like:

Customizable Visualizations: Tailor insights with over 80 visualization options.

AI-Powered Analysis: Detect complex disinformation patterns with advanced AI/ML models.

Geospatial and Sentiment Analysis: Monitor threats and narrative spreads across regions and demographics. See details in case studies of sentiment analysis and influencer identification.

Zignal’s holistic approach combines data collection, rapid threat detection, and actionable insights, positioning it as a leader in disinformation defense. For an in-depth look, visit Zignal Labs.

Buster.AI: Human-AI Collaboration Against Misinformation

Designed to combat disinformation with precision, Buster.AI employs deep learning algorithms to analyze vast datasets and assess content credibility. Its standout features include:

Rapid Verification: Quickly analyze claims and detect manipulations, including deepfakes.

Dynamic Data Repository: Continuously refreshed global data streams ensure up-to-date analyses.

Actionable Intelligence: Transform complex data into concise, actionable insights for financial and media sectors.

Buster.AI emphasizes the synergy between AI and human expertise, empowering decision-makers to counter disinformation effectively. Learn more about their view of disinformation in this blog post.

Final Thoughts

The fight against disinformation has made significant strides, with a variety of tools and solutions now available to detect falsehoods, monitor campaigns, and provide valuable insights. These technologies excel in detecting harmful narratives at scale, analyzing data patterns, and offering actionable intelligence for countering manipulation. Their common strengths lie in their ability to process vast amounts of information, track the evolution of disinformation, and enable real-time responses through advanced analytics and visualization tools.

However, technology alone cannot solve the complex issue of disinformation. As highlighted in AI Startups and the Fight Against Mis/Disinformation Online: An Update (Schiffrin et al., 2022), humans remain indispensable in this battle. While AI applications have grown more nuanced and sophisticated since 2019, their limitations in recognizing context, intent, and cultural nuances underscore the need for human oversight. This dual reliance on technology and human intervention reflects a broader truth: disinformation is not merely a technological challenge but a symptom of deeper political, economic, and societal issues.

The business landscape for disinformation solutions has also faced hurdles. The market for business-to-consumer (B2C) solutions remains limited, and financial incentives for social media platforms to address disinformation are often misaligned. Notably, many start-ups in this space have been acquired or shuttered, as seen with companies like “Crisp Thinking”, “Yonder”, “Factmata”, and “L1ght”.

Moreover, a collaborative, multi-stakeholder approach is essential. Combating disinformation requires the combined efforts of policymakers, regulators, social media platforms, journalists, and public accountability actors such as academics and civil society. Policies must strike a delicate balance between curbing harmful content and preserving democratic values, such as free speech.

Looking ahead, the path forward involves addressing the structural barriers that allow disinformation to thrive. Expanding the scope of tools to cover diverse platforms and languages, ensuring ethical and unbiased AI, and fostering partnerships between technology providers and human experts will be critical. Ultimately, the challenge of disinformation cannot be solved by any single tool or sector. Only through collective innovation, accountability, and shared responsibility can we build a more resilient digital environment that fosters trust and truth in an increasingly interconnected world.

What tools or strategies have you found effective in combating disinformation?

More References:

Coene, Guy, and Evangelos Konstantinis. "Benchmarking exercise and identification of AI tool to detect false information on food." EFSA Supporting Publications 22.2 (2025): 9261E. access via the link