Bridging the Gap: Rethinking OSINT Workflows to Counter Disinformation

By examining the problem from first principles, we can begin to answer deeper questions

If you’ve read my previous posts on the disconnect between academic research and industry practices in tackling disinformation—or the tools and resources available to combat it—you likely have a foundational understanding of the common features, strengths, and capabilities across these tools. You might also have reflected on the challenges practitioners face and considered future directions for improvement.

One challenge that comes up repeatedly is user experience (UX). What should the product interface look like? How can a tool seamlessly integrate into an analyst’s workflow? And how do we ensure human-in-the-loop processes are supported effectively?

But let’s take a step back and ask a fundamental question: What core capabilities do OSINT (Open Source Intelligence) practitioners need to effectively monitor and counter disinformation campaigns? By exploring this question, we can identify deeper insights, such as how tools can balance functionality and simplicity without sacrificing depth or accuracy.

In this post, I’ll walk you through how OSINT analysts approach disinformation analysis in real-world scenarios. We’ll break down their workflows, examine their challenges, and explore how these insights can inspire better tools to scale human efforts against the growing threat of disinformation.

How OSINT Analysts Work: The Disinformation Analysis Workflow

At its core, the disinformation analysis process follows a structured workflow. This isn’t just a linear checklist—it’s iterative, flexible, and constantly refined based on new insights. Here’s a simplified version of the process:

Understanding Requirements: Start with a clear scope and objectives for the investigation.

Collection Planning: Create a strategy to systematically gather relevant intelligence data.

Data Collection: Collect data, adjust filters, and refine the process to remove noise and irrelevant results.

Exploitation and Enrichment: Extract meaningful insights and enhance data to answer key questions.

Analysis: Identify and map key patterns, such as coordinated inauthentic behavior (CIB).

Dissemination: Share findings through structured reports, often using frameworks like the DISARM framework (used by EU institutions, among others) or Meta’s Online Operations Kill Chain.

Let’s make this more concrete with an example.

Imagine monitoring a geopolitical topic such as “Naval warfare in the Russian invasion of Ukraine” with keywords like “navy” and “Black Sea Fleet”. Initial searches might return thousands of posts, but many could be irrelevant, such as fans discussing “Navy Admirals” from the anime One Piece. Analysts need to refine their filters and remove this noise iteratively. This cycle of hypothesis testing, validation, and refinement continues until the collected data meets a quality threshold. Only then can deeper analysis, such as detecting coordinated campaigns, begin.
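To make the refinement loop tangible, here is a minimal Python sketch of that keyword filtering. The function name `filter_posts`, the sample posts, and the exclusion terms are all made up for illustration; a real pipeline would sit on top of a platform API or data export and would likely need more than keyword matching.

```python
import re


def filter_posts(posts, include_terms, exclude_terms):
    """Keep posts that mention an include term and no exclude term."""

    def compile_terms(terms):
        # Case-insensitive, whole-word match for any term in the list.
        pattern = r"\b(?:" + "|".join(re.escape(t) for t in terms) + r")\b"
        return re.compile(pattern, re.IGNORECASE)

    include_re = compile_terms(include_terms)
    exclude_re = compile_terms(exclude_terms) if exclude_terms else None

    kept = []
    for text in posts:
        if not include_re.search(text):
            continue  # off-topic: no keyword hit
        if exclude_re and exclude_re.search(text):
            continue  # keyword hit, but matches a known noise term
        kept.append(text)
    return kept


# Illustrative sample data, not real posts.
posts = [
    "Black Sea Fleet vessel reported damaged near Sevastopol",
    "Ranking the Navy Admirals in One Piece, best to worst",
    "Navy drone footage circulating on Telegram",
    "New anime episode drops tonight",
]
include = ["navy", "Black Sea Fleet"]

# First pass: keywords only. The One Piece post slips through.
first_pass = filter_posts(posts, include, exclude_terms=[])

# Second pass: the analyst adds exclusion terms after reviewing the noise.
second_pass = filter_posts(posts, include, exclude_terms=["One Piece", "anime"])
```

Each pass is one turn of the loop: review a sample of what came back, add or adjust terms, and run it again until the noise level is acceptable.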

The key takeaway? Every stage—whether planning, collection, or analysis—can loop back to earlier steps as new insights emerge. It’s a dynamic, adaptive process that reflects the complexity of countering disinformation in real time.

The Role of Domain Expertise in FIMI Analysis

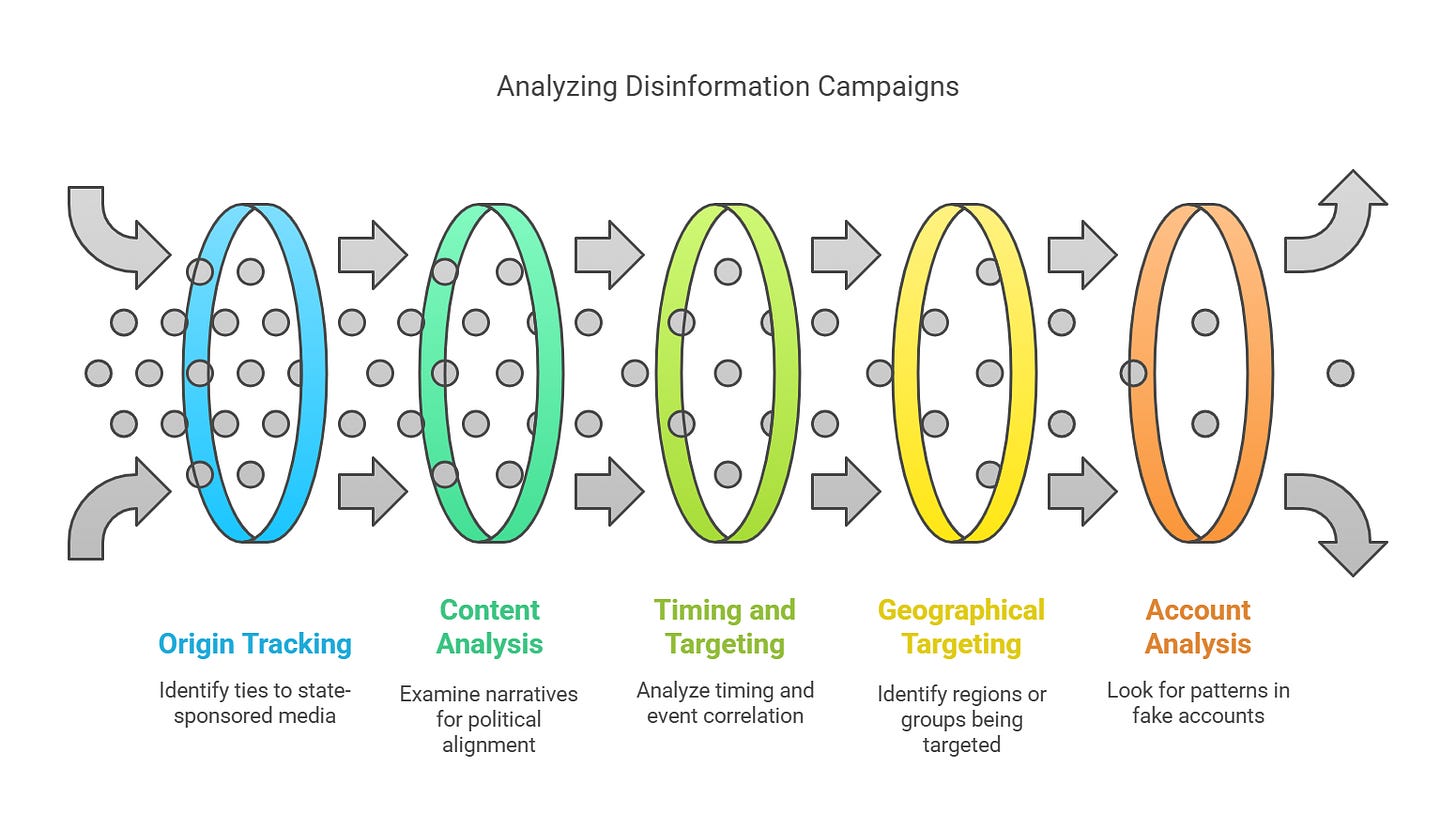

When countering Foreign Information Manipulation and Interference (FIMI), domain expertise is critical. Analysts rely on a combination of investigative techniques, structured workflows, and frameworks to tackle state-sponsored disinformation campaigns. Here are some common practices in the OSINT community for detecting and analyzing CIB:

Origin Tracking: Identify ties to state-sponsored media or individuals. For example, Russian outlets like RT and Sputnik play pivotal roles in wartime propaganda.

Content Analysis: Examine whether narratives align with a state’s political agenda. For instance, China’s messaging often seeks to discredit protests in Hong Kong.

Timing and Targeting: Disinformation campaigns frequently coincide with elections, protests, or other key events, revealing their strategic intent.

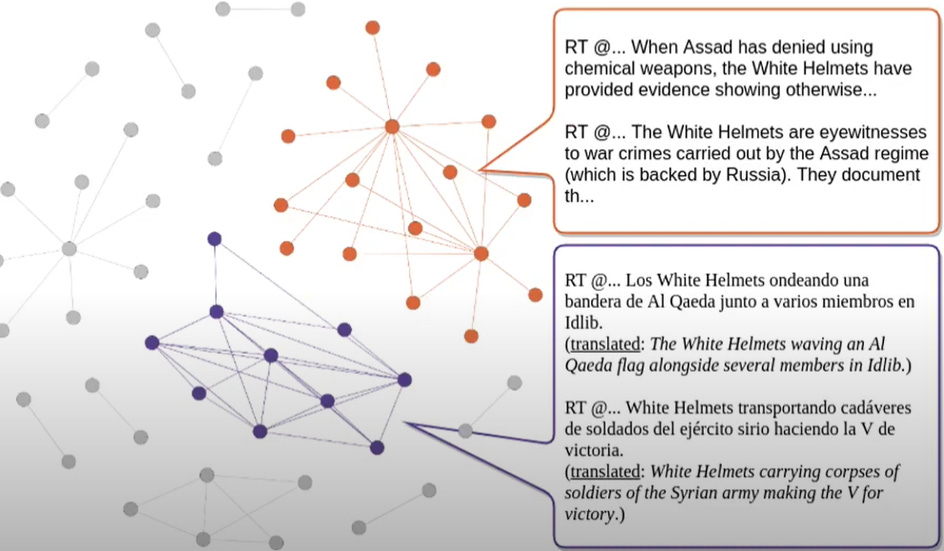

Geographical and Demographic Targeting: Analyze which regions or groups are being targeted to uncover potential geopolitical motivations. For a real-world example, see “Russian propaganda targeting Spanish-language users proliferates on social media”.

Account Analysis: Look for patterns in fake accounts—generic bios, high activity volumes, or reliance on hacked information.

By combining these factors, analysts can uncover the motives and goals of disinformation campaigns. Reports like Meta’s periodic threat intelligence updates often highlight real-world examples and provide summaries of these activities.
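As a rough illustration of the account analysis step, the sketch below turns two of the signals mentioned above (generic bios and high activity volume) into simple flags. The `Account` fields, the phrase list, and the 100-posts-per-day threshold are assumptions chosen for the example, not established cut-offs, and a flag here is only a prompt for manual review.

```python
from dataclasses import dataclass

# Illustrative phrases only; a real list would come from analyst experience.
GENERIC_BIO_PHRASES = ("news lover", "just here for the truth", "follow back")


@dataclass
class Account:
    handle: str
    bio: str
    posts_per_day: float


def account_flags(account, max_posts_per_day=100.0):
    """Return a list of heuristic flags for one account."""
    flags = []
    bio = account.bio.strip().lower()
    # An empty bio or a stock phrase counts as "generic" in this sketch.
    if not bio or any(phrase in bio for phrase in GENERIC_BIO_PHRASES):
        flags.append("generic_bio")
    # The threshold is an assumption; tune it per platform and topic.
    if account.posts_per_day > max_posts_per_day:
        flags.append("high_activity")
    return flags


suspicious = Account("acct_1", "News lover. Follow back!", 250.0)
ordinary = Account("acct_2", "Marine biologist based in Lisbon", 3.0)
```

No single flag proves anything. The value comes from combining these weak signals with the origin, content, and timing checks described above.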

The Power of Knowledge Bases and Cross-Referencing

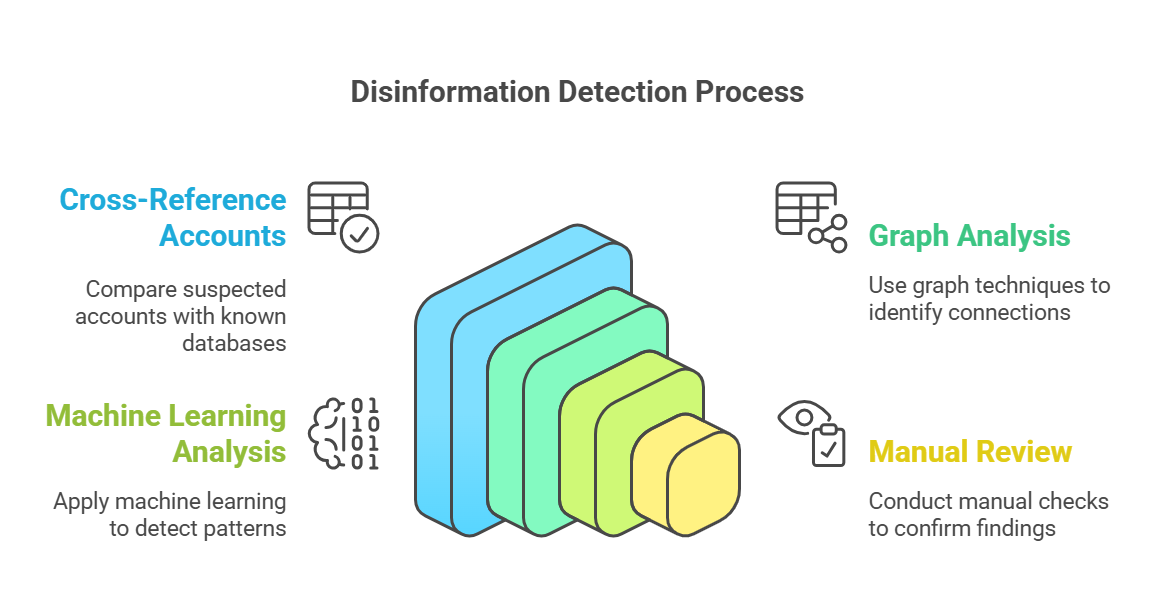

Another cornerstone of disinformation analysis is maintaining a curated knowledge base of known bad actors—state-affiliated accounts previously identified in influence operations. These databases are built using intelligence from various sources, such as OSINT investigations and social media platforms.

Cross-referencing suspected accounts against these databases helps uncover links between users, content, and activities. Techniques like graph analysis and machine learning can reveal connections, while manual reviews confirm findings.
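Here is a minimal sketch of that cross-referencing step, assuming the knowledge base is just a set of known handles and a set of known domains. Every name in it (`KNOWN_ACCOUNTS`, `KNOWN_DOMAINS`, the `linked_domains` and `reposts_from` fields) is hypothetical, and real knowledge bases carry far richer metadata.

```python
# Hypothetical knowledge base entries, for illustration only.
KNOWN_ACCOUNTS = {"known_outlet_a", "known_persona_b"}
KNOWN_DOMAINS = {"example-state-media.test"}


def cross_reference(accounts):
    """Return {handle: [reasons]} for accounts linked to the knowledge base.

    `accounts` maps a handle to a dict with two lists:
    "linked_domains" (domains the account shares) and
    "reposts_from" (handles the account amplifies).
    """
    hits = {}
    for handle, info in accounts.items():
        reasons = []
        if handle in KNOWN_ACCOUNTS:
            reasons.append("listed account")
        # One-hop links: shared domains and amplified accounts.
        for domain in sorted(set(info["linked_domains"]) & KNOWN_DOMAINS):
            reasons.append(f"links to {domain}")
        for source in sorted(set(info["reposts_from"]) & KNOWN_ACCOUNTS):
            reasons.append(f"reposts {source}")
        if reasons:
            hits[handle] = reasons
    return hits


suspected = {
    "acct_x": {"linked_domains": ["example-state-media.test"], "reposts_from": []},
    "acct_y": {"linked_domains": ["example.org"], "reposts_from": ["known_persona_b"]},
    "acct_z": {"linked_domains": ["example.org"], "reposts_from": ["acct_y"]},
}
leads = cross_reference(suspected)
```

The output is a list of leads, not an attribution. In this toy data `acct_z` is not flagged even though it amplifies `acct_y`, which is exactly the kind of second-hop connection that graph analysis and manual review are meant to surface.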

However, this method has limitations. Its effectiveness depends heavily on the accuracy and comprehensiveness of the knowledge base, and state-linked accounts frequently evolve, using sophisticated techniques to avoid detection or attribution. State-linked accounts (or domains) are also more challenging to attribute than state-controlled ones, since their tie to the state is less direct.

Why Context Matters More Than Falsehood

When analyzing disinformation, identifying “who” is behind a campaign is often more critical than simply determining whether a specific claim is true or false. Work on misinformation centers on fact-checking individual claims. Disinformation analysis, by contrast, is about intent and outcomes: how narratives are used to influence public opinion, win elections, or even sway the outcomes of wars.

To gain this deeper understanding, OSINT analysts focus on key elements like:

“Who”: Tracing who is behind the accounts involves details like:

Account Origins: Is it a bot or human? Does it have ties to a state actor?

Behavioral Patterns: When was the account created? What’s its posting history?

Influence Measures: How many followers does it have? Who is it following?

“What”: Identifying the specific narratives being propagated. By examining coordinated activities, analysts can uncover the main messages and themes that are being pushed across platforms and accounts. This helps pinpoint the intent and focus of the campaign.

“When”: Timing is crucial. CIB analysis tracks the synchronization of disinformation efforts, mapping when specific narratives are introduced and how they evolve. Disinformation campaigns often coincide with significant events—like elections, protests, or geopolitical crises—giving analysts clues about their strategic objectives.

By combining these elements with a detailed understanding of the broader context, analysts can better assess the effectiveness of a disinformation campaign and its potential impact on public discourse.
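The “when” element lends itself to a small worked example. The sketch below flags text that several distinct accounts posted within a short time window, which is one simple signal of possible coordination. The function name, the five-minute window, and the three-account minimum are assumptions for illustration; real CIB detection combines many more signals than identical text and timing.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def find_synchronized_posts(posts, window=timedelta(minutes=5), min_accounts=3):
    """Return {normalized text: sorted accounts} where at least `min_accounts`
    distinct accounts posted the same text within `window` of each other.

    `posts` is an iterable of (account, timestamp, text) tuples.
    """
    by_text = defaultdict(list)
    for account, timestamp, text in posts:
        # Normalize case and whitespace so trivial variations still match.
        by_text[" ".join(text.lower().split())].append((timestamp, account))

    flagged = {}
    for text, items in by_text.items():
        items.sort()
        start = 0
        for end in range(len(items)):
            # Slide the left edge until the span fits inside the window.
            while items[end][0] - items[start][0] > window:
                start += 1
            accounts = {acct for _, acct in items[start:end + 1]}
            if len(accounts) >= min_accounts:
                flagged.setdefault(text, set()).update(accounts)
    return {text: sorted(accts) for text, accts in flagged.items()}


# Illustrative sample data, not real posts.
t0 = datetime(2024, 1, 1, 12, 0)
sample = [
    ("acct_a", t0, "The fleet was never there"),
    ("acct_b", t0 + timedelta(minutes=1), "the fleet was never there"),
    ("acct_c", t0 + timedelta(minutes=3), "The fleet  was never there"),
    ("acct_d", t0 + timedelta(hours=6), "The fleet was never there"),
    ("acct_e", t0, "Lovely weather in Odesa today"),
]
result = find_synchronized_posts(sample)
```

In this sample, `acct_a`, `acct_b`, and `acct_c` are grouped because they posted the same text within minutes of each other, while `acct_d` repeated it six hours later and is left out. Organic virality can produce similar bursts, so a hit like this is a starting point for the “who” and “what” questions rather than a conclusion.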

Final Thoughts: Scaling Human Efforts with Better Tools

The fight against disinformation is both complex and ever-evolving, requiring a blend of robust tools and human expertise. Effective tools should prioritize simplicity and functionality, empowering analysts to trace back context, refine filters, and adapt quickly to emerging threats.

By examining real-world workflows and challenges, we can better understand what makes a tool truly valuable for OSINT practitioners. Whether it’s enhancing narrative detection, improving CIB analysis, or scaling efforts to counter state-sponsored campaigns, the end goal remains clear: to equip analysts with the resources they need to combat disinformation effectively and at scale.

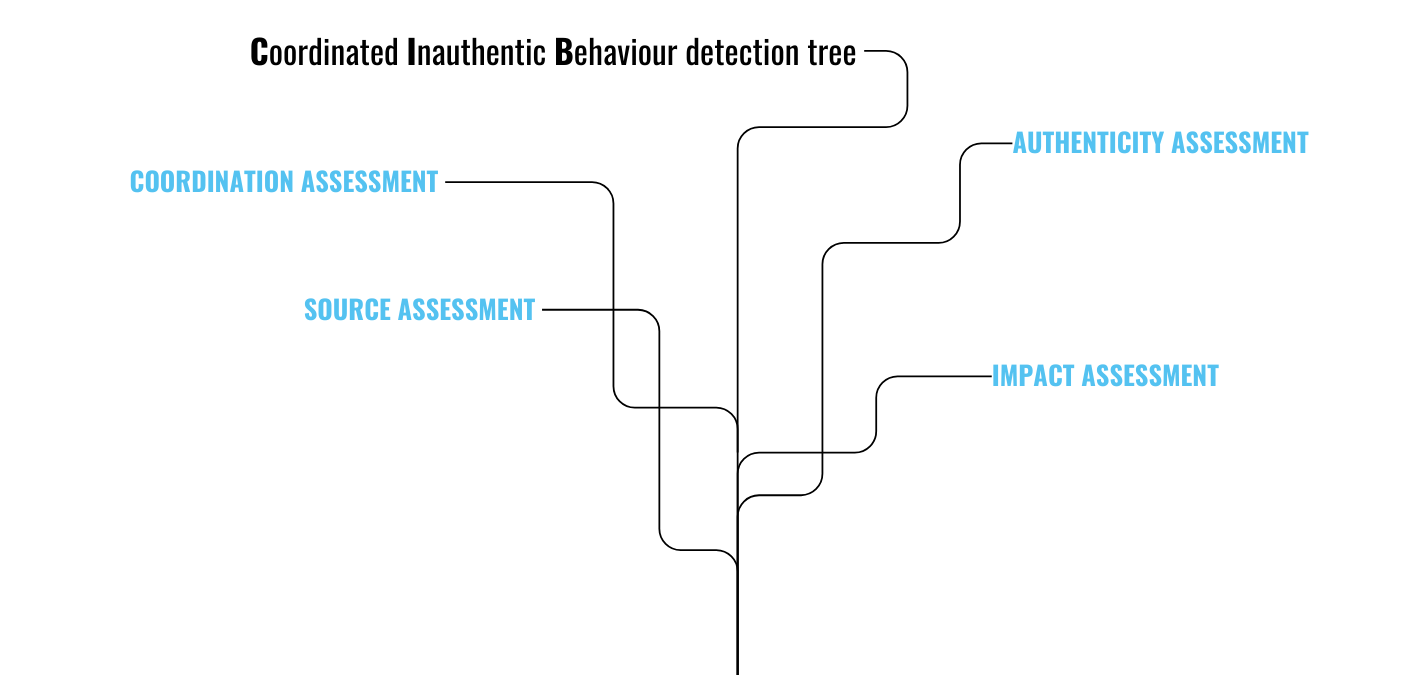

As disinformation tactics continue to evolve, staying informed and refining our approaches will be crucial. For those looking to dive deeper, Meta’s threat intelligence reports, such as the 2017-24 Adversarial Threat Report, offer insightful real-world case studies. Additionally, the EU DisinfoLab’s recent report, “Coordinated Inauthentic Behavior Detection Tree” (available here), revisits their earlier work on detecting CIB while exploring the role of AI technologies in this space.

What are your thoughts? Have you encountered unique challenges or gained insights while tackling disinformation? Let’s continue the conversation.