Coordinated Sharing Behavior Detection Conference at Sheffield

Key findings from the conference, together with my own thoughts

This was a one-day #AoIR2024 satellite event, organised recently, that brought together some of the world's leading experts to showcase, discuss, and advance the state of the art in multimodal and cross-platform coordinated behavior detection.

Experts discussed challenges in identifying coordinated sharing, emphasizing the shift from content-based to behavior-based detection. Presentations highlighted the development of open-source tools and methodologies, including multimodal analysis and cross-platform approaches. Researchers addressed limitations such as inconsistent detection thresholds and data access restrictions, particularly concerning non-Western contexts and real-time analysis. The conference underscored the need for ongoing collaboration and adaptable methods to combat evolving disinformation tactics. Ethical implications and the impact of generative AI were also key discussion points.

Keynote speech on Authenticity and Inauthenticity

Timothy Graham's keynote address, titled "The Inauthenticity Paradox," explored the complexities of authenticity and inauthenticity within social media. He argued that social media platforms benefit from user engagement, but they also profit from Coordinated Inauthentic Behavior (CIB) as it increases engagement metrics. Graham stated that authenticity, as defined by social media platforms, serves their strategic interests rather than representing an intrinsic value. He highlighted the paradox where platforms profit from both genuine engagement and CIB, suggesting that inauthenticity is ingrained in their design. This design provides users with affordances that influence their sharing behaviors, ultimately shaping the platforms' monetization strategies.

In brief, the keynote's key findings on the complex relationship between authenticity, inauthenticity, and social media platforms:

Platforms Profit from Inauthenticity: While platforms benefit from user engagement, they also profit from CIB because it inflates engagement metrics. This creates a conflict of interest, as platforms have a financial incentive to allow some level of inauthentic behavior to persist.

Platform-Defined Authenticity Serves Strategic Interests: Graham argued that how social media platforms define "authenticity" primarily serves their strategic interests rather than reflecting any inherent meaning or value. This ambiguous definition makes it challenging for researchers to effectively detect and combat CIB.

Inauthenticity is Embedded in Platform Design: Graham suggested that inauthenticity is ingrained within the very design of social media platforms. This design provides users with affordances that shape their sharing behaviors, which, in turn, drive the platforms' monetization strategies.

Key challenges discussed in this conference

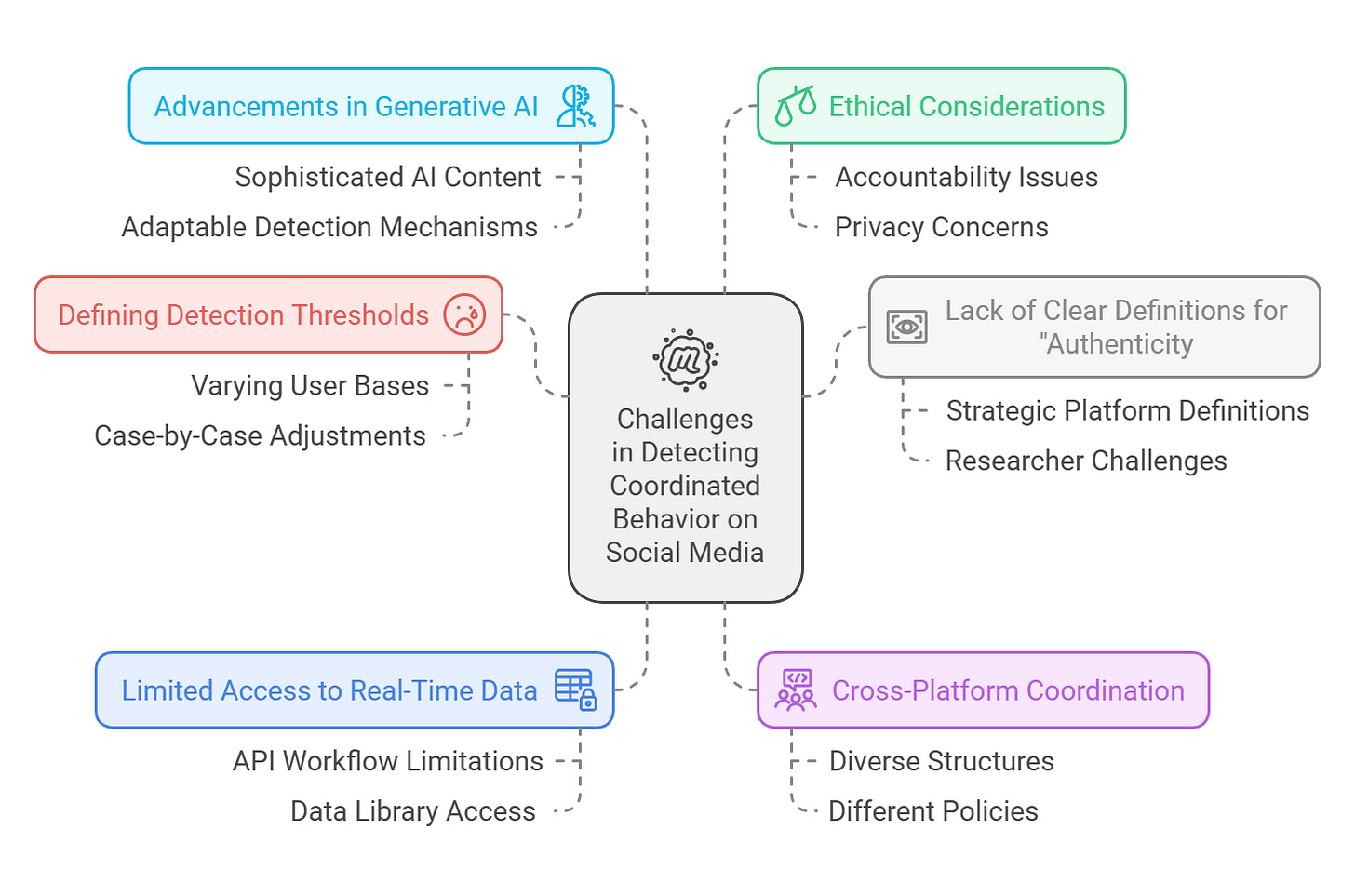

The conference discussed several key challenges that hinder the effective detection of coordinated behavior on social media:

Defining Detection Thresholds: Establishing consistent thresholds for identifying coordinated behavior is difficult due to the varying user bases and content types across platforms. Researchers at the conference noted that fixed thresholds often prove inadequate, necessitating case-by-case adjustments, which complicates the use of traditional machine learning methods that rely on labeled datasets. A follow-up post may discuss in detail the key detection thresholds used in computational CIB detection systems.

Lack of Clear Definitions for "Authenticity": Timothy Graham, in his keynote address, highlighted the ambiguity surrounding the concept of authenticity on social media. He argued that platforms often define "authenticity" in ways that align with their strategic interests rather than reflecting any inherent meaning. This lack of clear definition poses significant challenges for researchers attempting to detect and distinguish coordinated inauthentic behavior.

Limited Access to Real-Time Data: Researchers encountered obstacles in accessing real-time data from social media platforms like Meta and TikTok. This restricted access hinders effective tracking and analysis of coordinated behavior, particularly regarding real-time API workflows and limitations imposed by platforms on data library access.

Cross-Platform Coordination: Detecting coordinated behavior across multiple platforms presents additional challenges due to the diverse structures, policies, and data formats of each platform. Researchers need to develop methods that can effectively track and analyze coordinated activities that span multiple online environments.

Advancements in Generative AI: The rapid evolution of generative AI technologies poses new challenges for coordinated behavior detection. As AI-generated content becomes more sophisticated, it becomes increasingly difficult to distinguish between genuine user activity and CIB. Researchers need to develop adaptable detection mechanisms to keep pace with these advancements.

Ethical Considerations: Detecting coordinated behavior raises ethical concerns, particularly regarding accountability and privacy. Researchers must ensure that their methods do not stifle legitimate expression or infringe upon user privacy. Balancing effective detection with ethical considerations is a significant challenge in this field.

Key frameworks and methodologies

Researchers presenting at this conference reported various frameworks and methodologies to detect coordinated behavior on social media. These frameworks aim to address the complex and evolving nature of online coordination and account for the diverse challenges inherent in this research area.

Multimodal, Dynamic, and Time-Segmented Analyses

Recognizing the limitations of static approaches, researchers are emphasizing the importance of dynamic, multimodal, and time-segmented analyses. Static methods fail to capture the dynamic nature of coordinated networks, which evolve and adapt over time. By incorporating these dimensions, researchers can better understand how coordination patterns shift, identify subtle variations in behavior, and gain a more comprehensive understanding of coordinated activities. For instance, multimodal analysis allows researchers to examine coordination across various forms of content, such as text, images, and videos, providing a richer understanding of the phenomenon. However, researchers acknowledge that further research is needed to effectively utilize multimodality for distinguishing patterns in different contexts.
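To make the time-segmented idea concrete, here is a minimal Python sketch. It is not code from any presentation: it assumes shares arrive as hypothetical `(account, object_id, unix_time)` tuples, where `object_id` could be a URL, an image hash, or any other identifier, and the parameters `window_s`, `min_repeats`, and `segment_s` are illustrative choices.

```python
from collections import Counter, defaultdict
from itertools import combinations


def coordinated_pairs(shares, window_s=60, min_repeats=2):
    """Return account pairs that shared the same object within `window_s`
    seconds of each other on at least `min_repeats` distinct objects.

    `shares` is an iterable of (account, object_id, unix_time) tuples
    (a hypothetical input format chosen for this sketch).
    """
    by_object = defaultdict(list)
    for account, obj, ts in shares:
        by_object[obj].append((ts, account))

    pair_counts = Counter()
    for events in by_object.values():
        pairs_for_object = set()  # count each pair at most once per object
        for (t1, a1), (t2, a2) in combinations(sorted(events), 2):
            if a1 != a2 and abs(t2 - t1) <= window_s:
                pairs_for_object.add(tuple(sorted((a1, a2))))
        pair_counts.update(pairs_for_object)
    return {pair: n for pair, n in pair_counts.items() if n >= min_repeats}


def by_time_segment(shares, segment_s, **kwargs):
    """Run the same detection separately in consecutive time segments."""
    segments = defaultdict(list)
    for share in shares:
        segments[share[2] // segment_s].append(share)
    return {seg: coordinated_pairs(rows, **kwargs)
            for seg, rows in sorted(segments.items())}
```

Comparing the per-segment results shows which pairs persist, appear, or disappear over time, which a single static pass over the whole dataset would hide.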

Multilayer Network Analysis

Moving beyond traditional community detection methods [Vargas 2020, Pacheco 2020], researchers discussed turning to multilayer network analysis to investigate coordinated behavior.

This approach recognizes that coordinated activities often occur across multiple platforms, involving diverse actors and types of content. Multilayer networks integrate multiple modalities and time dimensions, providing a more nuanced and holistic view of coordinated behavior. They allow researchers to compare and contrast different forms of coordination, such as grassroots movements and orchestrated campaigns, and shed light on the evolving nature of online interactions.
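As a rough illustration of the data structure involved, the sketch below stores one edge set per layer and reports which account pairs are connected in several layers. The class name, the choice of `(modality, time_slice)` tuples as layer labels, and the `min_layers` parameter are all assumptions made for this example, not something shown at the conference.

```python
from collections import defaultdict


class MultilayerNetwork:
    """Toy multilayer network: one weighted, undirected edge set per layer."""

    def __init__(self):
        # layer label -> {frozenset({u, v}): accumulated weight}
        self.layers = defaultdict(lambda: defaultdict(float))

    def add_edge(self, layer, u, v, weight=1.0):
        self.layers[layer][frozenset((u, v))] += weight

    def edge_layers(self):
        """Map each edge to the set of layers in which it appears."""
        result = defaultdict(set)
        for layer, edges in self.layers.items():
            for edge in edges:
                result[edge].add(layer)
        return result

    def persistent_edges(self, min_layers=2):
        """Edges present in at least `min_layers` layers."""
        return {
            tuple(sorted(edge)): sorted(layers)
            for edge, layers in self.edge_layers().items()
            if len(layers) >= min_layers
        }
```

A pair of accounts linked through both text and image similarity, or across several time slices, is arguably a stronger signal than a link in a single layer, which is the intuition this structure tries to capture.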

Hierarchical Anomaly-Based Approach

Another framework gaining traction is the hierarchical anomaly-based approach. This method aims to overcome the limitations of existing approaches, such as interaction-based and similarity-based methods, which may struggle to identify independently active accounts involved in coordinated behavior. By analyzing anomalies at individual, pairwise, and group levels, this approach can detect coordinated activities without relying on predefined thresholds, making it more adaptable to different contexts and less prone to false positives. This framework also considers factors like behavioral frequency, effort, and temporal patterns, providing a more comprehensive assessment of coordinated behavior.
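The presentation's exact scoring was not available to me, so the following is only a sketch of the general idea: score how unusual each account and each pair is relative to the dataset's own distribution, rather than against a fixed count. It swaps in a standard robust z-score (median and MAD), covers only the individual and pairwise levels, and uses hypothetical inputs (`post_counts`, `pair_counts`).

```python
from statistics import median


def robust_z(values):
    """Median/MAD-based z-scores. Returns zeros when the MAD is zero."""
    values = list(values)
    if not values:
        return []
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return [0.0 for _ in values]
    # 0.6745 rescales the MAD so scores are comparable to standard z-scores
    return [0.6745 * (v - med) / mad for v in values]


def score_levels(post_counts, pair_counts):
    """Anomaly scores at two levels.

    post_counts: {account: number of posts}             (individual level)
    pair_counts: {(account, account): co-share count}   (pairwise level)
    """
    accounts = list(post_counts)
    pairs = list(pair_counts)
    individual = dict(zip(accounts, robust_z(post_counts[a] for a in accounts)))
    pairwise = dict(zip(pairs, robust_z(pair_counts[p] for p in pairs)))
    return individual, pairwise
```

Ranking accounts and pairs by these scores avoids hard-coding a cutoff such as "ten shared links"; a group-level score could be built on top, for example by aggregating pairwise scores within a candidate cluster.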

Cross-Platform Coordination Detection

Researchers are also developing frameworks to specifically address the challenges of cross-platform coordination. Given the distinct structures and policies of different platforms, tracking coordinated activities across multiple platforms is a complex task. One approach involves using URLs as universal identifiers to trace the flow of information between platforms. This method can reveal patterns of coordinated link sharing and highlight key connections between platforms where coordinated activities are concentrated. For instance, research suggests that Facebook and Telegram exhibit significant coordinated activity.
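A minimal sketch of the URL-as-identifier idea, using only the Python standard library. The input format `(platform, url, unix_time)`, the list of tracking parameters, and the rule "earlier platform feeds later platform" are simplifying assumptions for illustration, not the method used in the study.

```python
from collections import Counter
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Common tracking parameters to drop (an illustrative, incomplete list)
TRACKING_PREFIXES = ("utm_",)
TRACKING_KEYS = {"fbclid", "gclid"}


def normalize_url(url):
    """Reduce a URL to a canonical form so shares on different platforms match."""
    parts = urlsplit(url.strip())
    query = [
        (k, v)
        for k, v in parse_qsl(parts.query, keep_blank_values=True)
        if not k.lower().startswith(TRACKING_PREFIXES) and k.lower() not in TRACKING_KEYS
    ]
    path = parts.path.rstrip("/") or "/"
    # Drop the fragment and sort the remaining query parameters
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path,
                       urlencode(sorted(query)), ""))


def platform_flows(posts):
    """Count how often a URL first appears on one platform and then on another.

    `posts` is an iterable of (platform, url, unix_time) tuples.
    """
    first_seen = {}
    for platform, url, ts in posts:
        key = (normalize_url(url), platform)
        if key not in first_seen or ts < first_seen[key]:
            first_seen[key] = ts

    by_url = {}
    for (url, platform), ts in first_seen.items():
        by_url.setdefault(url, []).append((ts, platform))

    flows = Counter()
    for sightings in by_url.values():
        sightings.sort()
        for (_, src), (_, dst) in zip(sightings, sightings[1:]):
            flows[(src, dst)] += 1
    return flows
```

The resulting counts give a crude platform-to-platform flow table; a handful of dominant entries would correspond to the "key connections" described above.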

Knowledge Graphs for CIB Detection

Knowledge graphs offer a promising framework for detecting CIB, particularly in the context of high-stakes events like elections. These graphs pre-encode data and establish relationships between different elements, allowing researchers to analyze structured information about actors, events, and narratives. This approach can facilitate the identification of coordinated efforts to manipulate public discourse and spread disinformation.
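For readers unfamiliar with knowledge graphs, the toy example below stores facts as subject-predicate-object triples and answers one structured question: which accounts promoted narratives that target a given event? The class, the predicate names (`promotes`, `targets`), and the entity names are hypothetical and chosen only for this sketch; a production system would more likely use a dedicated graph database.

```python
class TripleStore:
    """Tiny in-memory store of (subject, predicate, object) triples."""

    def __init__(self):
        self.triples = set()

    def add(self, subject, predicate, obj):
        self.triples.add((subject, predicate, obj))

    def match(self, subject=None, predicate=None, obj=None):
        """Return triples matching the given fields; None acts as a wildcard."""
        return sorted(
            t for t in self.triples
            if (subject is None or t[0] == subject)
            and (predicate is None or t[1] == predicate)
            and (obj is None or t[2] == obj)
        )


def accounts_for_event(store, event):
    """Accounts that promote any narrative targeting `event`."""
    narratives = {s for s, _, _ in store.match(predicate="targets", obj=event)}
    return sorted({
        s
        for narrative in narratives
        for s, _, _ in store.match(predicate="promotes", obj=narrative)
    })
```

Because the relationships are encoded up front, questions that span actors, narratives, and events become simple lookups rather than ad hoc joins over raw posts.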

The frameworks discussed above represent significant advancements in the field of coordinated behavior detection. They demonstrate a growing understanding of the complex and multifaceted nature of online coordination and a commitment to developing more sophisticated and effective methods for detection and analysis. However, various presentations also highlight the ongoing challenges researchers face, including limited data access and the need to balance effective detection with ethical considerations.

Key advancements presented at this conference

This conference report summarizes several advancements in automatic CIB detection techniques.

Dynamic, Multimodal, and Time-Segmented Analyses: Researchers emphasized that static methods are inadequate for detecting CIB. Instead, they advocated for dynamic, multimodal, and time-segmented analyses to capture network shifts and nuances across various forms of coordination.

Open-Source Toolkits: The conference highlighted the development of open-source toolkits for CIB detection. One example is the 'coorsim' package, which employs an embedding-based approach to detect multimodal coordinated behaviors, particularly in deceptive or manipulative content. It addresses the need for cross-lingual detection and analysis of non-textual modalities, which are often overlooked in CIB research.

Multimodal Embeddings: A six-step framework was introduced to integrate multimodal analysis, enabling the detection of "similitude" (i.e., co-similarity) in CIB across text, images, and videos. This framework leverages advanced models like Sentence-BERT, visual transformers, and CLIP. While promising, this approach faces challenges such as limited task-specific datasets, high computational demands, and complexities in integrating multimodal data.

Multilayer Network Analysis: Traditional community detection methods, like the Louvain method, may not effectively reveal overlapping communities. To address this, researchers suggested using multilayer networks that integrate multiple modalities and time dimensions. This approach provides a more comprehensive understanding of coordinated behaviors across various scales.

Hierarchical Anomaly-Based Approach: A hierarchical anomaly-based approach was proposed to overcome the limitations of interaction-based and similarity-based methods. This approach analyzes anomalies at individual, pairwise, and group levels without predefined thresholds, considering behavioral frequency, effort, and temporal patterns.

Platform- and Content-Independent Tools: Researchers presented CooRTweet, an R tool designed for platform- and content-independent coordination detection. It allows for the analysis of multimodal and cross-platform data using a flexible threshold approach, making it adaptable to various online environments.

Cross-Platform Coordination via URL Tracking: A study explored cross-platform coordinated link-sharing behaviors by using URLs as universal identifiers. This research revealed that information flow between platforms often revolves around a few primary connections, particularly between Facebook and Telegram.
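To illustrate the embedding-based co-similarity idea from the list above, here is a small pure-Python sketch. It assumes each post has already been turned into a vector by some encoder (for instance a Sentence-BERT or CLIP model); the toy vectors, the function names, and the `min_similarity` cutoff are assumptions of this example and do not reflect the API of `coorsim` or CooRTweet.

```python
import math
from itertools import combinations


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def similar_posts(posts, min_similarity=0.9):
    """Find posts by different accounts whose embeddings are nearly identical.

    `posts` is a list of (account, embedding) pairs, where the embedding is
    assumed to come from a text, image, or joint text-image encoder.
    """
    hits = []
    for (a1, e1), (a2, e2) in combinations(posts, 2):
        if a1 != a2:
            sim = cosine(e1, e2)
            if sim >= min_similarity:
                hits.append((a1, a2, round(sim, 3)))
    return hits
```

Because the comparison happens in embedding space, two posts can match even when the wording or language differs or the content is an image, which is the cross-lingual and non-textual case the list above refers to. The all-pairs loop is quadratic, so a real pipeline would need an approximate nearest-neighbour index.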

The conference also highlighted the challenges of CIB detection:

Defining Detection Thresholds: Setting consistent detection thresholds is difficult due to the diversity of user bases and content types across platforms.

Limited Access to Real-Time Data: Researchers faced obstacles in accessing real-time data from platforms like Meta and TikTok, which hindered their ability to track and analyze CIB effectively.

Overall, the conference demonstrated significant progress in automatic CIB detection techniques, particularly in incorporating multimodal analysis and developing open-source tools. However, challenges remain, emphasizing the need for ongoing collaboration and improved data access.

Summary

This academic conference offers an in-depth exploration of methodologies and frameworks for detecting disinformation and Coordinated Inauthentic Behavior (CIB). However, it falls short in addressing several critical topics that directly tackle the practical challenges faced in real-world OSINT (Open Source Intelligence) applications. As these challenges grow increasingly significant for practitioners and industry stakeholders, their absence from the discussion highlights a crucial gap in bridging theory with practical implementation.

Data Access and Platform Cooperation

The conference discusses limitations in data access from platforms like Meta and TikTok. This suggests that researchers often face hurdles in obtaining the necessary data to conduct comprehensive analyses.

This challenge is arguably less severe in practice and industry, where access to real-time data and platform APIs is both crucial and available for effective monitoring and detection. Popular data providers and aggregators widely adopted in industry and OSINT communities include Brandwatch, Meta Content Library (previously CrowdTangle), Sprinklr, Pyrra, SocialGist, Meltwater, and Hootsuite Insights.

It is worth highlighting the increasing difficulties academics have faced in recent years in compiling comprehensive open-source datasets for researching coordinated inauthentic behavior (CIB). This difficulty stems from a few key challenges:

Gathering large-scale, cross-platform data (multimodality) from both inauthentic and authentic campaigns. This task involves collecting data from various social media platforms, each with its own structure and policies, making the process technically complex.

Limited access to data platforms comparable to industry resources. Researchers often face restrictions in accessing data through APIs and other means, hindering their ability to gather data on a scale similar to industry researchers. Paid social media analytics platforms such as Brandwatch, which serve as industry-standard tools and data infrastructure, are generally not available to academic researchers. Moreover, for various reasons, platforms are not inclined to share data for academic research. X (formerly Twitter), for example, has abandoned the free tier of its data API and banned data scraping and crawling.

These challenges directly impact the ability of academics to create and contribute new open-source datasets, which are essential for advancing research and development of new methodologies for studying CIB.

These data constraints were discussed by researchers at this conference:

The Need for Cross-Platform Data: The presentations emphasized the importance of cross-platform analysis for understanding coordinated behavior. Researchers like Jakob Kristensen use URLs as universal identifiers to track information flow between platforms like Facebook and Telegram. This highlights the need for datasets that encompass data from multiple platforms to capture the full scope of coordinated activities.

Data Access Limitations: Multiple presenters mentioned the challenges posed by restricted data access. For example, Fabio Giglietto and Giada Marino's research was hampered by Meta's limited data library access and TikTok's inconsistent API functionality. Similarly, Jennifer Stromer-Galley's project struggled to obtain grants for misinformation research in the U.S. and relied on a grant from a knowledge graph company to access the necessary data.

Overcoming these obstacles requires a collaborative effort between researchers, social media platforms, and funding agencies to facilitate data access, promote data sharing initiatives, and provide resources that enable academics to conduct research on par with industry capabilities. By the way, it's encouraging to see some of Meta's initiatives, such as the Content Library and API, which provide researchers with access to extensive, publicly available content from Facebook and Instagram.

Policy support

Researcher access to data is crucial for gaining insights into online platforms, and the EU is working on measures to facilitate it. A joint submission on this topic from the UCD Centre for Digital Policy and DCU FuJo (EDMO Ireland coordinator) was recently published.

Key Points of the DSA Article 40:

Enables researchers to study and assess risks and mitigation measures on Very Large Online Platforms and Search Engines.

Defines procedures, data formats, documentation, and establishes a DSA data access portal for streamlined data sharing.

Evolving Tactics, Industry Frameworks, and Adaptability

Academics highlighted the dynamic nature of coordinated behavior and the need for adaptive detection methods. Disinformation actors are constantly evolving their tactics, making it challenging to develop and maintain effective detection mechanisms. This challenge is particularly relevant in practice and industry, where real-time monitoring and rapid response are crucial to mitigate the spread of disinformation. From this perspective, the conference did not seem to cover the Tactics, Techniques and Procedures (TTP) frameworks and standards that industry practitioners widely use to analyse and map threat intelligence, such as the DISARM Framework and Meta's Online Operations Kill Chain.

The DISARM (DISinformation Analysis & Risk Management) framework is an open-source framework designed for describing and understanding the behavioural parts of disinformation/FIMI. It sets out best practices for fighting such activities through sharing data and analysis, and can inform effective action. The framework was developed drawing on global cybersecurity best practices. Since 2023, the DISARM Framework has been formally adopted by the European Union and the United States as part of a “common standard for exchanging structured threat information on Foreign Information Manipulation and Interference” at the Trade and Technology Council meeting.

Meta’s Online Operations Kill Chain is used by Meta's security team to compare online operations, spot weaknesses, and identify the most essential opportunities for disruption. Threat intelligence involves analyzing evidence-based information about cyber attacks, which helps cyber security experts identify issues and create targeted solutions. Similar to DISARM, this model is designed to be adaptable to multiple types of online threats, especially those with human targets.

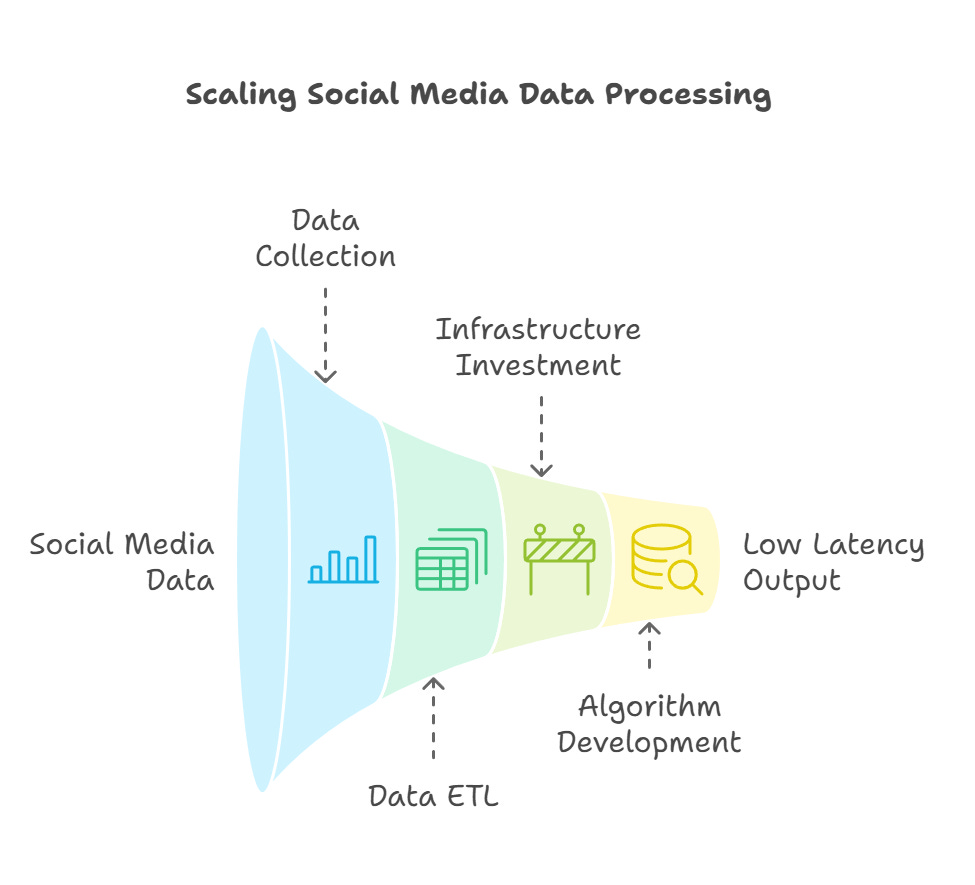

Scalability and Resource Constraints

Several of the presented frameworks and tools, such as the multimodal analysis techniques, require significant computational resources. In practice and industry, scaling these methods to the massive volume of data generated on social media platforms is a significant challenge: it involves large-scale data collection, data ETL, and keeping the latency of system output low or at least acceptable. From a production and commercial perspective, organizations need to invest in robust infrastructure and develop efficient algorithms to ensure that detection mechanisms can keep pace with the volume and velocity of online activity.

Ethical Considerations and Transparency

The conference briefly mentioned the ethical implications of detection methods. In practice and industry, implementing these methods raises important ethical considerations regarding privacy, freedom of expression, and potential biases in algorithms. Striking a balance between effective detection and respecting user rights is crucial. Industry actors need to establish transparent processes and guidelines for disinformation detection and mitigation, ensuring accountability and public trust. This suggests that the optimal methodology for large-scale, systematic CIB monitoring and detection in practice must take these practical constraints into account.

Defining and Addressing Emerging Harm

While the conference focused on some key technical aspects of commonly known harmful behavior detection, it's important to note that the ultimate goal of these efforts is to mitigate the harmful effects of ever-changing disinformation and CIB tactics. Defining and measuring harm in a nuanced way is a complex challenge in practice and industry. Developing effective strategies for countering disinformation and mitigating its impact requires a multi-faceted approach that goes beyond simply identifying coordinated behavior. It may involve fact-checking, promoting media literacy, and collaborating with policymakers to address the root causes of disinformation.

Many key topics that have emerged and that mostly concern policy makers, OSINT communities, and social platforms are missing, most notably Foreign Information Manipulation and Interference (FIMI), particularly in presidential election monitoring. For instance, new amendments to the Online Safety Bill in the UK force social platforms to summarise the results of their most recent risk assessments on illegal content and child safety in their terms of service. Social platforms are accountable for how well they address illegal content such as state-backed disinformation. Since 2024, the UK has also had new national security legislation covering foreign interference.

Monitoring and detecting FIMI and CIB is becoming an increasingly important requirement. The European External Action Service (EEAS) has identified FIMI as a significant threat, particularly in the context of elections and international relations. For example, the 2nd EEAS report on FIMI threats investigated 750 FIMI incidents between December 2022 and November 2023, nearly doubling the detection and analysis capacity of the previous year. Ukraine was the primary target of FIMI activities, with 160 recorded cases, followed by the United States (58 cases), Poland (33), Germany (31), France (25), and Serbia (23). FIMI activities often capitalize on existing attention around significant events, such as elections, highlighting the need for increased vigilance during such periods. To address this threat, the EEAS has developed a FIMI Toolbox that equips analytical teams with tools for real-time monitoring, focusing on recognizing both general and country-specific election-related FIMI. The FIMI Toolbox also implements a common framework and methodology to systematically collect evidence on FIMI activity, and it encourages collaboration between international partners, civil society organizations, and other stakeholders to create a more networked and connected collective response.

Thus, the increasing importance of monitoring and detecting foreign state-backed CIB reflects the growing sophistication of information manipulation tactics and their potential impact on democratic processes and international relations.

For general practice on collecting data for computational CIB detection, see https://dl.acm.org/doi/10.1145/3411495.3421363

https://www.eeas.europa.eu/sites/default/files/documents/2023/Annex%203%20-%20FIMI_29%20May.docx.pdf