Bridging the Gap: How the DISARM Framework is Shaping the Fight Against Disinformation

A brief introduction to the DISARM Framework

You can also listen to a podcast version of this post on Spotify.

Let’s talk about something that comes up often: the disconnect between academic research and industry practice when it comes to tackling disinformation. If you’ve been following my work, you’ll know I’ve touched on this before (check out this post for more). Today, I want to dive into one of the most promising tools in this space, the DISARM Framework, and why it’s becoming a game-changer for disinformation investigations.

The Big Picture: Frameworks and Standards

Over the past few years, the cybersecurity field has seen a surge in frameworks designed to combat threats. You’ve probably heard of MITRE ATT&CK, the RICHDATA Framework (from CSET at Georgetown University), the Diamond Model of Intrusion Analysis, the Cyber Kill Chain, the Unified Kill Chain, the Pyramid of Pain, and the DISARM Framework (previously AMITT). These frameworks are built around Tactics, Techniques, and Procedures (TTPs), which are basically the playbook bad actors use to execute their campaigns. Each offers a unique perspective and can serve as a guide for understanding and combating disinformation campaigns. A more recent addition, from 2023, is the Online Operations Kill Chain, published by the Carnegie Endowment for International Peace, a nonpartisan international affairs think tank founded in 1910 and headquartered in Washington, D.C.

There are also many industry and policy initiatives. For example, the DIGI Disinformation Code (the Australian Code of Practice on Disinformation and Misinformation) has been signed by major companies such as Meta, Google, and Microsoft. The EU Code of Practice on Disinformation was signed by 34 signatories and presented in 2022.

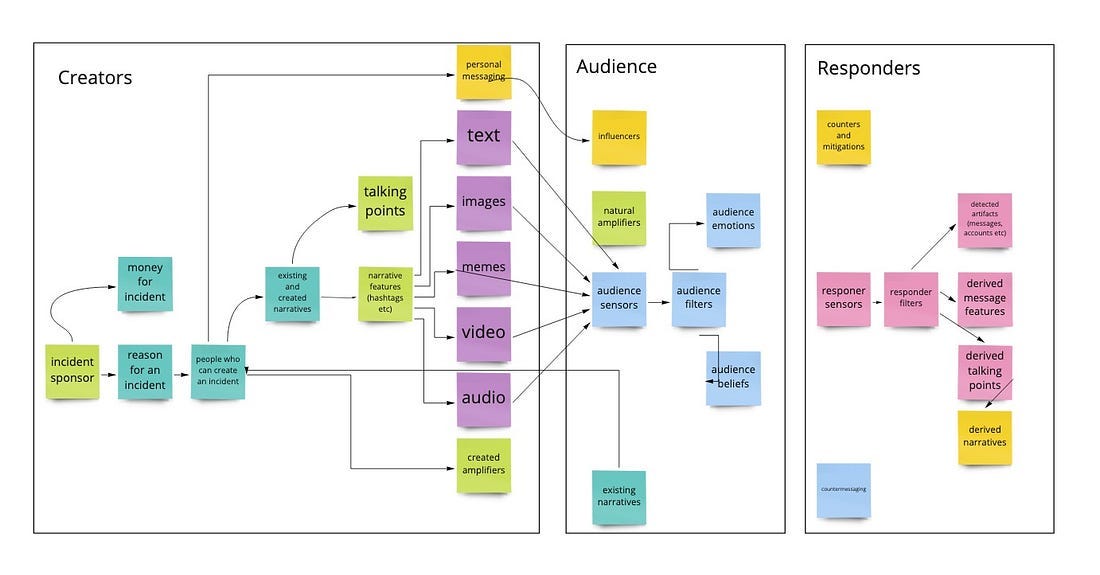

But here’s the thing: disinformation is a different beast. It’s not just about hacking systems; it’s about manipulating minds. That’s where DISARM comes in. Developed by the Credibility Coalition’s MisinfoSec Working Group, DISARM is an open-source framework specifically designed to tackle disinformation campaigns.

The Basics of TTPs

Alright, let’s talk about TTPs—short for Tactics, Techniques, and Procedures. If you’re in the cybersecurity or disinformation space, you’ve probably heard this term thrown around. But what does it actually mean? Let’s unpack it in plain English.

TTPs are essentially the playbook that bad actors use to carry out their attacks. Think of it as a three-tiered system:

Tactics: These describe observed behavior at the highest level. For example, “targeted engagement” is a tactic where the goal is to get specific content in front of a specific audience.

Techniques: Each tactic is broken down into a number of more specific techniques. Using the same example, a technique might be “posting to reach a specific audience”.

Procedures: Each technique is in turn broken down into procedures, the most granular level. These are the nitty-gritty steps taken to execute the technique. So, in this case, the procedure could be “posting hashtags” to amplify the content.
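To make the three tiers concrete, here is a minimal Python (3.9+) sketch. It models the hierarchy as a small tree and lists every tactic > technique > procedure path. The class names `Tactic`, `Technique`, and `Procedure` and the helper `flatten` are hypothetical and chosen for illustration. They are not part of any official DISARM tooling.

```python
from dataclasses import dataclass, field


@dataclass
class Procedure:
    """Most granular level: a concrete step an actor takes."""
    description: str


@dataclass
class Technique:
    """A more specific way of carrying out a tactic."""
    name: str
    procedures: list[Procedure] = field(default_factory=list)


@dataclass
class Tactic:
    """Highest level of observed behavior."""
    name: str
    techniques: list[Technique] = field(default_factory=list)


def flatten(tactic: Tactic) -> list[tuple[str, str, str]]:
    """Return every (tactic, technique, procedure) path in the tree."""
    return [
        (tactic.name, technique.name, procedure.description)
        for technique in tactic.techniques
        for procedure in technique.procedures
    ]


# The example from the text, encoded as a three-level tree.
targeted_engagement = Tactic(
    name="Targeted engagement",
    techniques=[
        Technique(
            name="Posting to reach a specific audience",
            procedures=[Procedure("Posting hashtags")],
        )
    ],
)

for path in flatten(targeted_engagement):
    print(" > ".join(path))
```

Nesting procedures under techniques, and techniques under tactics, mirrors the text. Each level refines the one above it, so adding another procedure (say, replying to a target account) is just another entry in the relevant list.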

TTPs in the Disinformation World

When it comes to disinformation, TTPs take on a slightly different flavor. Here, they refer to the methods and approaches used by disinformation actors to spread their campaigns. Let’s break it down with an example:

Tactic: Targeted engagement (getting content in front of a specific group).

Technique: Posting to reach a specific audience.

Procedure: Posting hashtags to make the content more discoverable.

But it doesn’t stop there. Targeted engagement can take many forms, like advertising, mentioning or replying to a target account, or even spear-phishing (a fancy term for tricking a specific person into giving up sensitive information, such as credentials, or into becoming part of the operation). It’s all about finding ways to plant content where it’ll have the most impact.

Why does this matter?

Understanding TTPs is crucial because it helps us anticipate and disrupt disinformation campaigns. By knowing the tactics, techniques, and procedures that bad actors use, we can develop better strategies to counter them.

What’s DISARM All About?

DISARM stands for Disinformation Analysis and Risk Management. It’s split into two main frameworks:

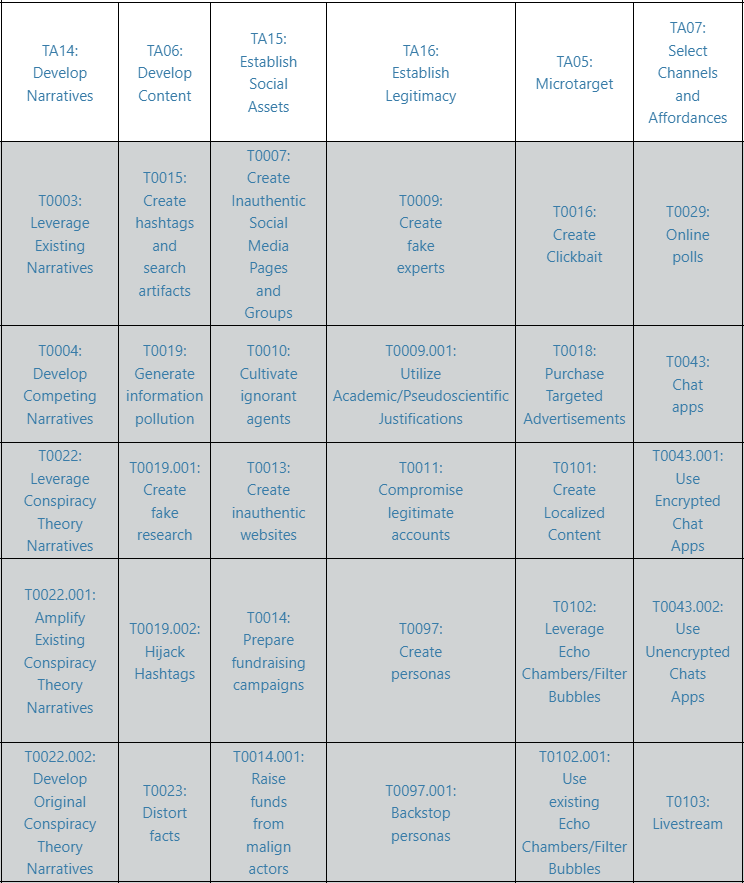

DISARM Red: Focuses on the behaviors of those creating disinformation.

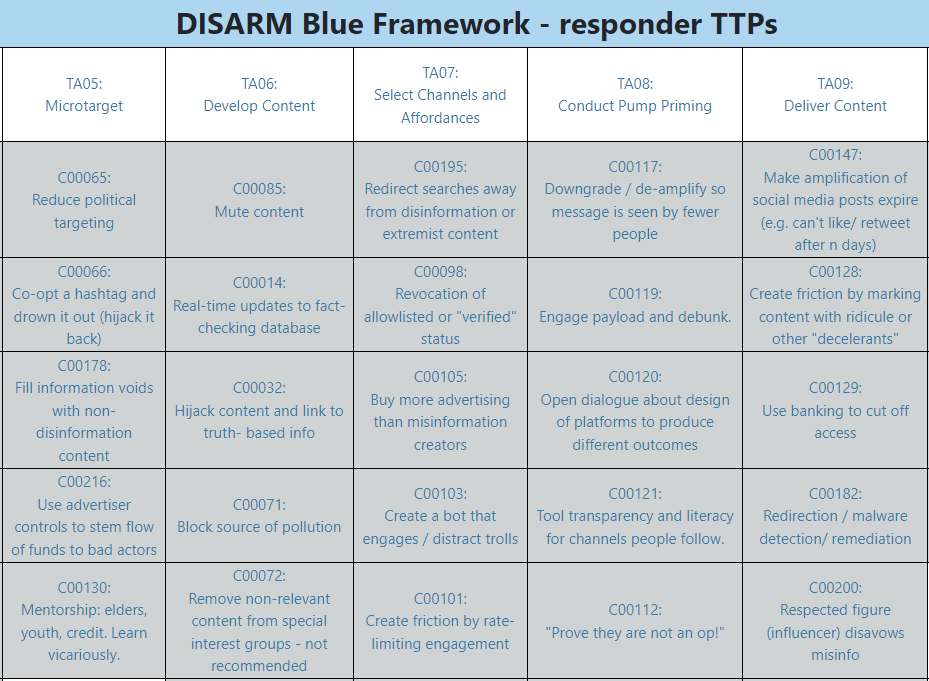

DISARM Blue: Outlines potential responses to disinformation.

Unlike other frameworks that stay in the theoretical realm, DISARM is all about action. It breaks down disinformation campaigns into stages—planning, preparing, executing, and assessing—allowing analysts to disrupt them at every step.

The Kill Chain Approach

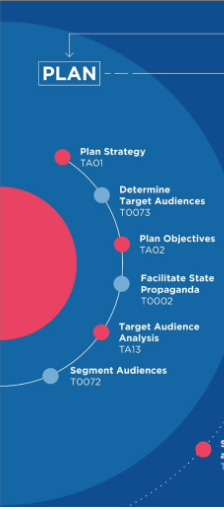

DISARM uses a kill chain model to dissect disinformation campaigns. Here’s how it works:

Planning: The attacker sets their goals and scouts the information landscape.

Preparing: They set up assets like fake accounts or websites.

Executing: They seed and amplify content to reach their target audience.

Assessing: They measure the impact and adjust their strategy.

By breaking it down this way, DISARM gives us a clear roadmap for disrupting campaigns at every stage.

In the planning phase, the attacker envisions and designs the desired outcome.

Tactics include reconnoitering the information environment and establishing goals.

In the preparing phase, the attacker lays the groundwork to execute the plan.

Tactics include selecting and establishing assets like websites and accounts.

In the executing phase, the activities are carried out via the established assets.

Tactics include seeding and amplifying content.

In the assessing phase, the attacker evaluates the effects and impact of the campaign.

Tactics include measuring engagement and monitoring reactions.
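The phase-to-tactic mapping above can be sketched as a lookup table. Assume a defender has tagged an incident with the tactics observed so far. The hypothetical helper `remaining_phases` then returns the later phases where the campaign could still be disrupted. The tactic labels are paraphrased from this post and are not the full DISARM list.

```python
from enum import Enum
from typing import Optional


class Phase(Enum):
    """The four kill-chain phases, in the order a campaign moves through them."""
    PLAN = 1
    PREPARE = 2
    EXECUTE = 3
    ASSESS = 4


# Illustrative tactics per phase, paraphrased from the text (not the full DISARM list).
PHASE_TACTICS = {
    Phase.PLAN: {"reconnoiter information environment", "establish goals"},
    Phase.PREPARE: {"select assets", "establish assets"},
    Phase.EXECUTE: {"seed content", "amplify content"},
    Phase.ASSESS: {"measure engagement", "monitor reactions"},
}


def phase_of(tactic: str) -> Optional[Phase]:
    """Look up which phase a tactic belongs to (None if it is unknown)."""
    for phase, tactics in PHASE_TACTICS.items():
        if tactic in tactics:
            return phase
    return None


def remaining_phases(observed: list[str]) -> list[Phase]:
    """Return the phases that come after the latest phase seen in the observed tactics."""
    seen = [p for p in (phase_of(t) for t in observed) if p is not None]
    latest = max((p.value for p in seen), default=0)
    return [p for p in Phase if p.value > latest]


# An incident where fake accounts have been set up but no content has been seeded yet.
print(remaining_phases(["establish goals", "establish assets"]))
```

In this example the campaign has reached the preparing phase, so execution and assessment are still ahead. That is the "disrupt at every stage" idea in miniature.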

Why DISARM Matters

Here’s the kicker: DISARM isn’t just another tool in the toolbox. It’s a standardized approach that’s already being used in real-world scenarios. Take the 2023 EEAS report, for example. It shows how DISARM was applied to analyze foreign information manipulation and interference (FIMI) incidents involving Russian and Chinese actors, including campaigns around Russia’s aggression against Ukraine. By mapping out techniques such as developing image-based content or amplifying narratives, DISARM helps us understand the “behavioral fingerprint” of these campaigns.

Combining TTPs with how often they appear in incidents related to Russia’s aggression against Ukraine shows that:

Developing image-based and video-based content were the two most recurrent techniques.

Content was amplified by cross-posting across groups and platforms, either to new communities within the target audiences or to entirely new target audiences.

By profiling individual occurrences in real-world disinformation incidents, we can also uncover which combinations of TTPs tend to occur together.
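Once incidents are tagged with techniques, counting technique frequency and co-occurrence is straightforward. The sketch below uses Python's `collections.Counter` and `itertools.combinations`. The incident data is invented for illustration and is not taken from the EEAS report.

```python
from collections import Counter
from itertools import combinations

# Hypothetical incidents, each tagged with the techniques observed in it.
# These are made-up examples, NOT data from the EEAS report.
incidents = [
    {"develop image-based content", "cross-post to new communities"},
    {"develop video-based content", "cross-post to new communities"},
    {"develop image-based content", "develop video-based content", "discredit credible source"},
    {"develop image-based content", "cross-post to new communities"},
]

# How often each technique appears across all incidents.
technique_counts = Counter(t for incident in incidents for t in incident)

# How often each unordered pair of techniques appears in the same incident.
# Sorting first means ("a", "b") and ("b", "a") are counted under one key.
pair_counts = Counter(
    pair
    for incident in incidents
    for pair in combinations(sorted(incident), 2)
)

print(technique_counts.most_common(2))
print(pair_counts.most_common(1))
```

Raw pair counts favor techniques that are common everywhere. At scale, analysts often normalize them (for example, into lift or Jaccard scores) before reading much into a pairing.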

Categorising the incidents related to the Russian invasion of Ukraine by their TTPs, across three behavioral themes, reveals that visual content (videos and images) is a prominent feature of Russian information operations in the conflict. Discrediting credible sources and manipulating narratives also appear to be key tactics used against public officials.

Collaboration is Key

One of the coolest things about DISARM is how it fosters collaboration. It provides a shared taxonomy and common data format for sharing threat information. This is crucial for scaling up efforts to counter disinformation globally.

A common data format for sharing threat information is foundational for networked collaboration at scale.
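Here is a rough illustration of why a shared format helps. The sketch serializes a tagged incident to JSON and reads it back. Two teams that agree on the same fields and taxonomy can exchange records like this without losing information. The `IncidentReport` class, its fields, and the technique labels are hypothetical. In practice, threat-sharing communities tend to rely on established standards such as STIX 2.1 rather than an ad hoc schema like this one.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class IncidentReport:
    """Hypothetical shareable incident record; field names are illustrative only."""
    incident_id: str
    summary: str
    framework: str = "DISARM Red"
    # Technique labels from the shared taxonomy (hypothetical examples below).
    techniques: list[str] = field(default_factory=list)
    # Platforms where the activity was observed.
    observed_on: list[str] = field(default_factory=list)


def to_json(report: IncidentReport) -> str:
    """Serialize a report with stable key order so diffs between teams stay readable."""
    return json.dumps(asdict(report), sort_keys=True)


def from_json(text: str) -> IncidentReport:
    """Rebuild a report from JSON produced by to_json."""
    return IncidentReport(**json.loads(text))


report = IncidentReport(
    incident_id="example-001",
    summary="Impersonation accounts repost real content before posting misleading content",
    techniques=["impersonate existing entity", "cross-post to new communities"],
    observed_on=["Twitter"],
)
payload = to_json(report)
assert from_json(payload) == report  # round trip loses nothing
print(payload)
```

The important part is not JSON itself but the agreement. Both sides use the same field names and the same technique vocabulary, which is what a shared taxonomy like DISARM provides.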

Facilitating Response (DISARM Blue)

As the 2023 EEAS report shows for incidents related to the Russian invasion of Ukraine:

Countermeasures were recorded for 18 incidents.

80% of the incidents did not trigger any type of response.

The most common countermeasures were statements of refutation (debunking or fact-checking the claims made in an incident).

Five Types of Disinformation Response

Nelson (2022) outlines five main approaches to countering disinformation with the DISARM framework (resource-based, artifact-based, narrative-based, volume-based, and resilience-based), each with its own strengths and weaknesses.

Takeaway: the best approach depends on the specific situation.

Real-World Applications

Another case study involves inauthentic accounts linked to the CCP spreading disinformation about Australian politics.

These accounts amplify negative narratives and co-opt tweet text posted in good faith by real users.

They also target Australian foreign policy decisions such as #AUKUS, the US alliance, Australia’s relationship with the UK, and discussions about China-related policies and decisions. Real Australians are unknowingly promoting and engaging with these posts.

More than 70 new accounts have impersonated ASPI’s official Twitter account (@ASPI_org). Many of these fake accounts start by tweeting real ASPI content before posting misleading content.
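One simple way to triage suspected impersonators like the fake ASPI accounts is to flag handles that look very similar to the official one. The sketch below uses Python's standard `difflib.SequenceMatcher`. The 0.7 threshold and the candidate handles are arbitrary, hypothetical values. Handle similarity alone is a weak signal, so a real investigation would combine it with other evidence, such as account creation dates and whether an account first reposts genuine content.

```python
from difflib import SequenceMatcher

OFFICIAL_HANDLE = "ASPI_org"


def similarity(a: str, b: str) -> float:
    """Case-insensitive similarity ratio in [0, 1] between two handles."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def flag_lookalikes(handles, official=OFFICIAL_HANDLE, threshold=0.7):
    """Return handles that closely resemble, but are not, the official handle.

    The threshold is an arbitrary illustrative value, not a tuned one.
    """
    return [
        h for h in handles
        if h.lower() != official.lower() and similarity(h, official) >= threshold
    ]


# Hypothetical handles for illustration; these are not accounts from the case study.
candidates = ["ASPI_org", "ASPl_org", "ASPI_0rg", "ASPI_org_news", "gardening_tips"]
print(flag_lookalikes(candidates))
```

`ASPl_org` (with a lowercase L) gets flagged even though it can look identical to the real handle in many fonts. The exact official handle is excluded on purpose.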

Other civil society organisations and individuals are also being targeted with similar tactics.

By mapping these campaigns to DISARM’s TTPs, analysts were able to uncover coordinated efforts to undermine trust in democratic institutions.

The Road Ahead

As the DISARM Foundation notes in its written evidence to a UK Parliament committee (pdf), there’s no “silver bullet” for countering disinformation. But frameworks like DISARM give us a fighting chance. By combining education, preemptive efforts, and targeted responses, we can build resilience against these threats.

Reference

EEAS Strategic Communications (2023), 1st EEAS Report on Foreign Information Manipulation and Interference Threats: Towards a Framework for Networked Defence, February 2023, 36 pp., available as a PDF (6.7 MB).

EEAS Strategic Communications (2024), 2nd EEAS Report on Foreign Information Manipulation and Interference Threats, January 2024, https://www.eeas.europa.eu/eeas/2nd-eeas-report-foreign-information-manipulation-and-interference-threats_en

Nimmo, B., Hutchins, E. (2023), Phase-based Tactical Analysis of Online Operations, Carnegie Endowment for International Peace.

Nelson, D. (2022), Five Types of Disinformation Response, DISARM Disinformation (Medium).

DISARM Foundation, Written evidence submitted by the DISARM Foundation, UK Parliament committee, https://committees.parliament.uk/writtenevidence/111495/pdf/